In Part 1 of this series, we explored the diverse applications and services that drive data centre design. Now we turn to how these requirements translate into physical and virtual infrastructure: the actual architecture that makes modern computing possible.

Physical vs Virtual Structure

Modern data centres exist in two parallel universes. The first is the tangible world — the physical servers, air conditioning units, and kilometres of cables. The second is a carefully constructed illusion: a virtual universe generated entirely by software running on those physical machines.

The virtual layer is essential: it bridges the gap between the rigid constraints of physical hardware and the fluid demands of customers. The traditional model, where customers would own and manage their own servers, didn’t scale. Businesses can’t be constrained by what hardware is available in a particular location; their specific requirements must be met in every corner of the globe, at any time of day or night. Through virtualisation, data centre operators create the illusion of providing exactly what each customer wants, precisely when and where they want it.

The underlying reality involves significant hardware inefficiency — a physical server might be running at only 20% capacity to guarantee that a customer’s virtual machine has the resources it needs. This inefficiency is sustainable only because data centre operators can charge many multiples of the cost of the underlying infrastructure.

Hyperscale cloud providers like AWS routinely charge ten or more times the cost of the underlying hardware, which still leaves a staggering operating margin (39.5% for AWS) once all other costs are paid, transforming wasteful resource allocation into a highly profitable business.
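The relationship between idle capacity, markup and margin is easier to see with numbers. The sketch below uses illustrative assumptions only: a hypothetical 10x price-to-hardware-cost markup and the 20% utilisation figure above. It ignores power, staff, networking and every other operating cost, which is why its result is higher than a real operating margin.

```python
def cost_per_used_unit(hardware_cost: float, utilisation: float) -> float:
    """Hardware cost attributable to each unit of capacity actually used.

    At 20% utilisation, every used unit carries the cost of five units
    of installed hardware.
    """
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in the range (0, 1]")
    return hardware_cost / utilisation


def margin_after_idle_capacity(markup: float, utilisation: float) -> float:
    """Fraction of revenue left after hardware cost, for price = markup * hardware cost.

    Only hardware is counted (assumption); power, cooling, staff and
    networking would push a real margin lower.
    """
    price = markup * 1.0                         # hardware cost normalised to 1
    cost = cost_per_used_unit(1.0, utilisation)
    return 1 - cost / price


if __name__ == "__main__":
    # Hypothetical figures: 10x markup, 20% utilisation.
    print(f"{cost_per_used_unit(1.0, 0.2):.1f}x hardware cost per used unit")
    print(f"{margin_after_idle_capacity(10, 0.2):.0%} margin on hardware alone")
```

Under these assumptions, idle capacity cuts a 10x markup down to a 50% margin on hardware alone, before any other cost is counted, which is how a large multiple can coexist with an operating margin below 40%.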

We’ll explore these business models and the tension between efficiency and performance in greater depth in Part 5. First, we’ll dive into the physical foundations and virtual abstractions that make this economic model possible.

Physical structure of a single data centre

The building is the outermost container of a data centre. Within this sit rows of standardised racks, which are the organising structure for almost all equipment. Racks come in a few standard widths and are divided vertically into standard units known as “Us” (rack units), each measuring 1.75 inches in height. Standard sizing enables equipment from different manufacturers to fit together.

The system is efficient and scalable because it is modular and standardised. Each rack accommodates a mixture of equipment: servers for computation, network switches and routers for connectivity, power distribution units (PDUs) for electrical supply, and cooling distribution equipment to manage thermal loads. Dimensional and operational standards ensure facility operators can purchase equipment in bulk, train maintenance staff on consistent procedures, and design cooling and power systems that can handle multiple generations of equipment.

Zooming in further, each server contains smaller, more specialised components. Power supply units (PSUs) convert the power delivered to the rack into the low-voltage direct current required by the other components. Cooling systems, ranging from simple fans and heat sinks to sophisticated liquid cooling loops, manage the substantial heat generated by processors and storage devices. The motherboard serves as the central communication hub, connecting processors (CPUs, GPUs, specialised accelerators like FPGAs), memory modules (predominantly DDR RAM), and storage devices (SSDs, hard drives, occasionally tape systems for archival storage).

The connections between servers carry three resources: information, electrical energy and heat. Data flows through copper or fibre optic cables connecting servers within racks, between racks, and ultimately to the wider internet. Electrical power flows through distribution systems that step down voltages from the grid supply to the requirements of individual components. In many modern data centres, cooling pipes carry chilled water or specialised coolants to transfer heat away from equipment to the outside world (the air, rivers or the ocean).

Location is also critical to data centre design and economics, expanding on the considerations we discussed in Part 1. Physical proximity to users directly impacts latency — a data centre serving London’s financial markets will typically be located within the M25 to minimise network delays. But beyond user proximity, operators must consider several infrastructure requirements that vary by geography.
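Distance matters because light in optical fibre travels at roughly two-thirds of its speed in a vacuum. The sketch below assumes a refractive index of about 1.47 for the fibre (a typical value for silica fibre, used here as an assumption) and counts propagation delay only; real paths add switching and serialisation delays, and cable routes are longer than the straight-line distance.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458   # in a vacuum
FIBRE_REFRACTIVE_INDEX = 1.47           # assumed typical value for silica fibre


def fibre_round_trip_us(distance_km: float) -> float:
    """Round-trip propagation delay, in microseconds, over distance_km of fibre."""
    speed_in_fibre = SPEED_OF_LIGHT_KM_PER_S / FIBRE_REFRACTIVE_INDEX
    one_way_s = distance_km / speed_in_fibre
    return 2 * one_way_s * 1_000_000


if __name__ == "__main__":
    for km in (1, 50, 600):
        print(f"{km:>4} km: {fibre_round_trip_us(km):8.1f} µs round trip")
```

At roughly 10 µs of round trip per kilometre, a site 50 km outside the city adds about half a millisecond to every exchange: significant for trading workloads, negligible for most web traffic.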

Water access has become increasingly important as power densities have risen. Modern AI training facilities can require tens of millions of litres of water annually for cooling systems, making locations near reliable water sources essential. Regions with cool climates — such as Iceland, northern Sweden, and parts of Canada — provide natural cooling advantages that can reduce operational costs.

Network connectivity requirements go beyond basic internet access. Major data centres require direct connections to internet service providers, international submarine cables, and internet exchange points (IXPs) to ensure redundancy and optimal routing. This typically constrains locations to major metropolitan areas or specific corridor regions with established telecommunications infrastructure.

Power grid stability and capacity represent another critical constraint. A large hyperscale data centre can consume 100-500 megawatts continuously, comparable to the electricity demand of a city (at the upper end, on the order of 1.5 to 2 million homes). This requires locations with robust electrical grids, proximity to power generation facilities, and often dedicated substations. The increasing focus on cheap (renewable) energy has driven data centre operators to prioritise locations with abundant clean power sources, explaining the concentration of facilities in regions like Virginia’s “Data Centre Alley” and Ireland’s technology corridor. However, Small Modular Reactors (SMRs) may shift the focus towards local nuclear power production for the next generation of AI-focused data centres. Of course, government incentives (tax, subsidies, etc.) have a large part to play in this too.
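Comparisons with homes depend heavily on what a "typical" household uses. The sketch below assumes an average UK household consumption of about 2,700 kWh of electricity per year (close to Ofgem's typical domestic consumption value; treat it as an assumption and substitute your own figure) and converts a facility's continuous draw into an equivalent number of homes.

```python
HOURS_PER_YEAR = 8_760
ASSUMED_HOUSEHOLD_KWH_PER_YEAR = 2_700  # assumption: typical UK household


def equivalent_homes(facility_mw: float,
                     household_kwh_per_year: float = ASSUMED_HOUSEHOLD_KWH_PER_YEAR) -> float:
    """Households whose combined annual use matches a facility drawing facility_mw continuously."""
    facility_kwh_per_year = facility_mw * 1_000 * HOURS_PER_YEAR
    return facility_kwh_per_year / household_kwh_per_year


if __name__ == "__main__":
    for mw in (100, 500):
        print(f"{mw} MW ≈ {equivalent_homes(mw):,.0f} homes")
```

Under these assumptions the 100-500 MW range corresponds to roughly 0.3 to 1.6 million homes; a lower per-household figure pushes the upper end towards two million.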

Road and transportation access, while perhaps less obvious, prove essential for ongoing operations. Data centres require regular deliveries of replacement equipment, and major upgrades may involve moving equipment weighing several tonnes. Facilities must be accessible to large trucks and, in some cases, specialised transportation for oversized equipment deliveries.

Security concerns permeate every aspect of data centre design. Modern data centres implement layered security architectures that begin at the perimeter and extend to individual servers. External barriers typically include reinforced fencing, vehicle barriers designed to stop truck-based attacks, and landscape design that prevents concealed approaches to the facility.

The building envelope itself serves as a security barrier, with reinforced walls, minimal windows, and carefully controlled entry points. Many facilities employ mantrap entry systems — secured chambers requiring successful authentication at one door before the next can be accessed — to prevent tailgating and ensure individual accountability for facility access.

Interior security measures include biometric scanners, smart card systems, and comprehensive CCTV coverage with intelligent motion detection. Some facilities implement two-person integrity policies for accessing critical areas, similar to nuclear facilities. Equipment racks themselves may have individual locks, ensuring that only authorised personnel can access specific customers’ hardware.

Perhaps most critically, data centre security must address both external threats and insider risks. The concentration of valuable computing resources and sensitive data makes these facilities attractive targets for both cybercriminals and state-sponsored actors, while the privileged access requirements for maintenance staff create potential insider threat vectors that require careful monitoring and controls.

To see for yourself what a data centre looks like, there is a (pretty entertaining) tour by Linus Tech Tips of one of Equinix’s Tier 1 data centres in Canada.

What a rack and unit chassis look like

The physical structure of data centre equipment begins with the standardised rack system. A standard 19-inch-wide rack stands approximately 2.1 metres tall and contains 42 vertical rack units.
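Rack-unit arithmetic is simple but governs every layout decision. The sketch below computes the usable mounting height of a rack and checks whether a hypothetical mix of equipment fits. The overall rack is taller than its mounting space because of the frame, top and base.

```python
INCHES_PER_U = 1.75
MM_PER_INCH = 25.4


def mounting_height_mm(rack_units: int) -> float:
    """Vertical mounting space provided by rack_units standard 1.75-inch units."""
    return rack_units * INCHES_PER_U * MM_PER_INCH


def free_units(equipment_heights_u: list[int], rack_units: int = 42) -> int:
    """Rack units left after installing the listed equipment (heights in U).

    Raises ValueError if the equipment would not fit.
    """
    used = sum(equipment_heights_u)
    if used > rack_units:
        raise ValueError(f"needs {used}U but the rack only has {rack_units}U")
    return rack_units - used


if __name__ == "__main__":
    print(f"42U of mounting space ≈ {mounting_height_mm(42) / 1000:.2f} m")
    # Hypothetical mix: 20 x 1U web servers, 5 x 2U database servers,
    # 2 x 4U storage servers and one 1U top-of-rack switch.
    layout = [1] * 20 + [2] * 5 + [4] * 2 + [1]
    print(f"{free_units(layout)}U free")
```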

Each piece of equipment in a rack occupies between one and several rack units of vertical space. A 1U server — the most common configuration for web applications and general computing workloads — measures just 1.75 inches tall. These pizza-box-sized units slide into the rack on mounting rails, allowing technicians to pull them out for maintenance without disconnecting power or network cables.

Moving up the scale, 2U servers provide additional space for more powerful processors, extra memory slots, and enhanced cooling systems. These are frequently used for database servers and computational workloads requiring substantial resources. At the upper end, 4U servers can accommodate multiple processors, dozens of memory modules, and extensive storage arrays — though they consume precious rack space that operators prefer to maximise.

The benefit of this standardisation becomes apparent when you observe a fully populated rack. Servers from different manufacturers — perhaps Dell PowerEdge alongside HPE ProLiant and Supermicro units — mount seamlessly together. The interchangeability enables data centre operators to select equipment based purely on performance and cost considerations rather than compatibility constraints.

The interior layout of a typical server is highly optimised. Many server motherboards have dual CPU sockets positioned for optimal thermal management alongside banks of memory slots that can accommodate hundreds of gigabytes of error-correcting memory. Front-accessible drive bays allow technicians to replace failed storage devices without powering down the system, while redundant power supplies ensure continued operation if one fails.

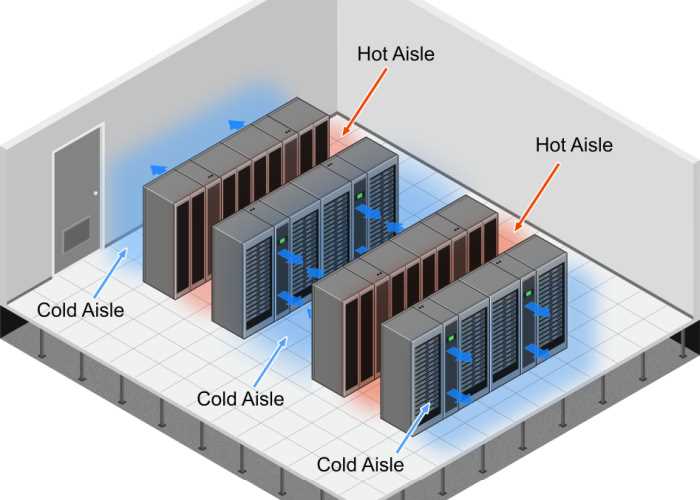

Cable management within racks requires systematic organisation to maintain airflow and enable maintenance access. Structured cabling systems route power connections along dedicated pathways separate from network cables, reducing electromagnetic interference and ensuring safety during maintenance. Many modern facilities arrange rows of racks in hot-aisle/cold-aisle configurations: servers draw cool air in from the front (the cold aisle) and exhaust heated air out of the back (the hot aisle), so that hot and cold air mix less and facility-wide cooling works more efficiently.

The evolution toward higher-density computing has driven the development of blade servers and microservers that pack even more computational units into standard rack space. HP’s BladeSystem and Dell’s PowerEdge VRTX exemplify this approach, fitting dozens of individual server modules into chassis that would traditionally house just a few standard servers.

However, this density comes with substantial challenges. Higher component density generates more heat within the same rack space, requiring enhanced cooling systems and more sophisticated thermal management. Power distribution must also be redesigned: a rack of fully populated blade chassis can draw tens of kilowatts continuously, requiring robust electrical infrastructure that many traditional data centres were not designed to support.

Compute

The computational heart of any data centre lies in its processors — the silicon chips that execute the billions of calculations required to serve websites, process databases, train AI models, and run the countless applications that power modern digital infrastructure.

However, the term “processor” itself has evolved far beyond the traditional CPU as data centres embrace increasingly specialised and heterogeneous computing architectures.

Processors

Central Processing Units (CPUs) remain the foundation of data centre computing, handling the sequential logic, control flow, and general-purpose computation that characterises most applications. Modern server CPUs like Intel’s Xeon Scalable processors and AMD’s EPYC series pack dozens of cores into single packages, enabling simultaneous execution of hundreds of threads. These enterprise processors differ significantly from consumer variants, incorporating features like error-correcting code (ECC) memory support, advanced virtualisation extensions, and enhanced security features including hardware-based trusted execution environments.

The architecture of these processors reflects the diverse demands of data centre workloads. Multiple levels of cache memory, from ultra-fast L1 caches measured in kilobytes to large shared L3 caches of tens or even hundreds of megabytes, ensure that frequently accessed data is quickly accessible to the processing cores. Advanced features like Intel’s AVX-512 vector extensions accelerate mathematical computations common in scientific and AI workloads, while sophisticated branch prediction and out-of-order execution maintain high performance across the unpredictable control flows typical of web applications.

Discrete accelerators represent the most significant shift in data centre computing over the past decade. Graphics Processing Units (GPUs) have become essential for AI training and inference due to their ability to perform thousands of parallel mathematical operations simultaneously. NVIDIA’s H100 and AMD’s MI300X dominate the AI acceleration market, with individual chips containing thousands of processing cores optimised for the matrix multiplications central to neural network computation.
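Matrix multiplication maps well onto thousands of cores because its work is enormous and highly regular. Multiplying an m×k matrix by a k×n matrix takes m·n·k multiply-adds, conventionally counted as 2·m·n·k floating-point operations, and every output element can be computed independently. The sketch below counts that work; the throughput figures are hypothetical placeholders, not specifications of any particular CPU or GPU.

```python
def matmul_flops(m: int, n: int, k: int) -> int:
    """Floating-point operations for (m x k) @ (k x n): one multiply and one add per term."""
    return 2 * m * n * k


def ideal_seconds(flops: int, sustained_flops_per_s: float) -> float:
    """Lower-bound runtime if the hardware sustained the given throughput."""
    return flops / sustained_flops_per_s


if __name__ == "__main__":
    work = matmul_flops(8192, 8192, 8192)
    # Hypothetical sustained throughputs, for comparison only.
    for label, rate in (("CPU, 2 TFLOP/s", 2e12), ("GPU, 400 TFLOP/s", 400e12)):
        print(f"{label}: {ideal_seconds(work, rate) * 1e3:.1f} ms")
```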

Network accelerators like Intel’s IPU (Infrastructure Processing Unit) and NVIDIA’s BlueField DPUs (Data Processing Units) offload network packet processing, encryption, and storage protocols from main CPUs. These specialised processors can handle millions of network packets per second while implementing complex security and quality-of-service policies, freeing main processors to focus on application logic.

Field-Programmable Gate Arrays (FPGAs) provide ultimate flexibility for highly specialised workloads. Companies like Xilinx (now part of AMD) and Altera (bought in 2015 and recently sold by Intel) produce chips that can be reconfigured for specific algorithms, enabling ultra-low-latency financial trading, real-time video processing, and custom AI inference pipelines. Major cloud providers including AWS, Microsoft Azure, and Alibaba Cloud offer FPGA-enabled instances for customers requiring this level of customisation.

Integrated accelerators represent an emerging trend toward incorporating specialised functionality directly into main processors. Intel’s Xeon processors now include AI acceleration units, cryptographic engines, and advanced networking features on the same silicon die as traditional CPU cores. This integration reduces power consumption and latency compared to discrete accelerators while providing performance benefits for common workloads.

ARM-based processors are gaining significant traction in data centres, driven by their power efficiency (although customer decisions are arguably driven by its knock-on effects: higher performance per watt and less heat to remove). Amazon’s Graviton processors, Ampere Computing’s Altra, and NVIDIA’s Grace demonstrate that ARM architectures can deliver competitive performance for many data centre workloads while consuming substantially less power than traditional x86 processors. This shift becomes particularly important as data centres grapple with cooling constraints.

Memory

Memory design balances capacity, speed, and reliability requirements that far exceed those of consumer computing. The memory hierarchy extends from ultra-fast processor caches to massive pools of system RAM, each optimised for different aspects of computational performance.

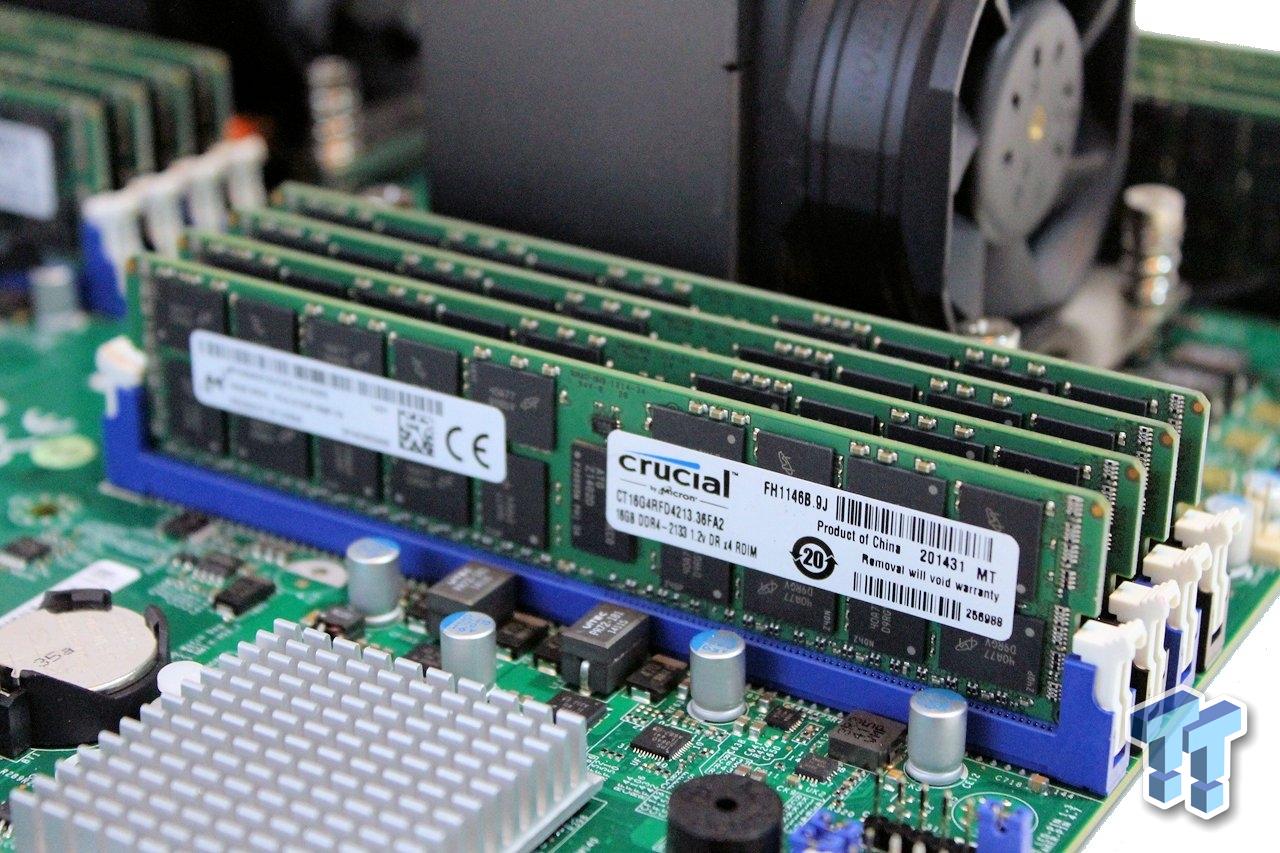

DDR (Double Data Rate) DRAM (Dynamic RAM) serves as the primary system memory for most data centre applications. Modern servers typically use DDR4 or the newer DDR5 standards, with individual modules ranging from 16GB to 128GB and servers supporting total capacities measured in terabytes. Enterprise DDR memory incorporates error-correcting code (ECC) technology that can detect and automatically correct single-bit errors, preventing data corruption that could cascade through applications and databases.
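Error correction works by storing extra check bits alongside the data so that a flipped bit produces a distinctive pattern, or syndrome, pointing at its position. Server ECC memory typically protects each 64-bit word with 8 check bits (single-error-correct, double-error-detect) and often uses stronger chip-level schemes. The toy sketch below uses the much smaller Hamming(7,4) code purely to illustrate the principle; unlike real server ECC, it cannot detect double-bit errors.

```python
def hamming74_encode(data: list[int]) -> list[int]:
    """Encode 4 data bits as a 7-bit codeword; parity bits sit at positions 1, 2 and 4."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4          # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4          # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4          # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword: list[int]) -> tuple[list[int], int]:
    """Correct up to one flipped bit and return (data bits, syndrome).

    The syndrome is the XOR of the 1-based positions holding a 1. It is 0 for
    a valid codeword and otherwise equals the position of a single flipped bit.
    """
    bits = list(codeword)
    syndrome = 0
    for position, bit in enumerate(bits, start=1):
        if bit:
            syndrome ^= position
    if syndrome:
        bits[syndrome - 1] ^= 1    # flip the faulty bit back
    return [bits[2], bits[4], bits[5], bits[6]], syndrome


if __name__ == "__main__":
    word = [1, 0, 1, 1]
    stored = hamming74_encode(word)
    stored[5] ^= 1                 # simulate a single-bit soft error
    recovered, syndrome = hamming74_decode(stored)
    print(recovered, "corrected bit at position", syndrome)
```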

The organisation of memory within servers affects both performance and reliability. Multi-channel memory controllers enable parallel access to multiple memory modules simultaneously, increasing aggregate bandwidth for memory-intensive applications. Advanced servers implement memory mirroring and memory sparing features that provide redundancy against memory module failures, ensuring continued operation even when individual components fail.

SRAM (Static RAM) appears primarily in processor caches, where its extremely fast access times enable processors to maintain high performance despite the relative slowness of main memory. Modern server processors include tens to hundreds of megabytes of SRAM distributed across L1, L2, and L3 cache levels, with sophisticated algorithms managing which data remains in these precious fast-access areas.
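Deciding what stays in a cache is a replacement-policy problem. Real CPU caches are set-associative and use hardware approximations of least-recently-used (LRU) eviction, among other policies, so the fully associative LRU cache below is a simplified sketch of the idea rather than a model of any particular processor.

```python
from collections import OrderedDict


class LRUCache:
    """Fully associative cache that evicts the least recently used line when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.lines = OrderedDict()   # keys ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Record an access; return True on a hit, False on a miss."""
        if address in self.lines:
            self.lines.move_to_end(address)      # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)       # evict the least recently used line
        self.lines[address] = None
        return False


if __name__ == "__main__":
    cache = LRUCache(capacity=4)
    # A small "hot" working set that fits in the cache, then a scan that does not.
    for address in [1, 2, 3, 1, 2, 3, 1, 2, 3] + list(range(10, 20)):
        cache.access(address)
    print(f"hits={cache.hits} misses={cache.misses}")
```

Repeated accesses to a small working set hit almost every time, while a long one-off scan misses on every access, which is why cache-friendly data layouts matter so much for performance.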

Non-volatile memory technologies bridge the gap between traditional RAM and storage, providing persistent data storage with access speeds approaching conventional memory. Storage Class Memory (SCM), such as Intel’s Optane (now discontinued), enables new architectural approaches where frequently accessed data can persist across server reboots while maintaining near-memory performance characteristics.

Advanced memory architectures are evolving to address the specific requirements of AI and high-performance computing workloads. High Bandwidth Memory (HBM) provides dramatically higher memory bandwidth by stacking DRAM dies vertically and placing the stacks on the same package as the processor, using advanced packaging techniques. AI accelerators like NVIDIA’s H100 (SXM version) incorporate HBM3 providing around 3.35 terabytes per second of memory bandwidth, more than 15 times that of an eight-channel DDR4-3200 server.
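Peak DRAM bandwidth follows directly from the channel count and transfer rate: each DDR4 or DDR5 channel carries 64 data bits (8 bytes) per transfer, with DDR5 splitting this into two 32-bit subchannels and ECC bits excluded. The sketch below uses that formula to compare a hypothetical eight-channel DDR4-3200 server and a twelve-channel DDR5-4800 server with the roughly 3.35 TB/s NVIDIA quotes for the H100 SXM. These are theoretical peaks; sustained bandwidth is lower in practice.

```python
def ddr_peak_gb_per_s(channels: int, megatransfers_per_s: int, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes/s) for a multi-channel DDR system."""
    return channels * megatransfers_per_s * 1e6 * bus_bytes / 1e9


H100_SXM_HBM3_GB_PER_S = 3_350  # NVIDIA's published figure for the H100 SXM


if __name__ == "__main__":
    systems = {
        "8-channel DDR4-3200": ddr_peak_gb_per_s(8, 3200),
        "12-channel DDR5-4800": ddr_peak_gb_per_s(12, 4800),
    }
    for name, bandwidth in systems.items():
        ratio = H100_SXM_HBM3_GB_PER_S / bandwidth
        print(f"{name}: {bandwidth:.1f} GB/s, H100 HBM3 is {ratio:.1f}x higher")
```

The same formula shows why adding memory channels, as multi-channel controllers do, scales aggregate bandwidth linearly.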

The emergence of memory pooling technologies, such as those built on Compute Express Link (CXL), allows multiple processors to share access to large pools of memory over high-speed interconnects. This disaggregation of memory from processing enables more flexible resource allocation and improved utilisation of expensive memory resources across entire data centre facilities. In practice, these memory pool servers look more like high-speed storage devices than conventional RAM, and are frequently used for in-memory databases like Redis and Memcached.

Storage

Storage systems balance cost, capacity, performance, and reliability across multiple tiers of technology. Unlike consumer storage, which prioritises simplicity and cost, enterprise storage must handle massive concurrent workloads whilst ensuring data durability across years or decades of continuous operation.

Connection Types and Interfaces

The physical connections between storage devices and servers determine the performance characteristics and deployment flexibility. PCIe has emerged as the highest-performance connection standard, with modern PCIe interfaces providing enormous bandwidth for directly attached storage devices, though the number of such connections per server is limited by the PCIe lanes its processors provide.

SATA remains prevalent for high-capacity storage applications where cost per gigabyte matters more than ultimate performance. SATA 3.0 supports transfer rates of up to 6 Gbps, making it suitable for traditional spinning hard drives and lower-performance solid-state drives. The simplicity and ubiquity of SATA interfaces enable cost-effective storage expansion, particularly for applications requiring massive capacity like backup systems and content archives.

M.2 represents a compact form factor that typically uses the NVMe protocol over PCIe connections, enabling high-performance storage in space-constrained environments. M.2 devices can be as small as a stick of chewing gum whilst providing performance comparable to full-sized PCIe cards, making them particularly attractive for dense server configurations.
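Much of the gap between SATA and PCIe comes down to signalling rate and line-encoding overhead. SATA 3.0 signals at 6 Gbit/s with 8b/10b encoding (10 bits on the wire per data byte), whereas PCIe 3.0 and later use 128b/130b encoding on each lane. The sketch below computes theoretical one-direction payload rates before protocol overheads; real drives deliver somewhat less.

```python
SATA3_LINE_RATE_GBPS = 6.0                      # gigabits per second on the wire
PCIE_GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}       # per-lane transfer rate by generation


def sata3_mb_per_s() -> float:
    """SATA 3.0 payload rate in MB/s: 8b/10b encoding sends 10 line bits per data byte."""
    return SATA3_LINE_RATE_GBPS * 1e9 / 10 / 1e6


def pcie_gb_per_s(generation: int, lanes: int) -> float:
    """PCIe 3.0+ payload rate in GB/s per direction, using 128b/130b encoding."""
    bits_per_s = PCIE_GT_PER_S[generation] * 1e9 * lanes * 128 / 130
    return bits_per_s / 8 / 1e9


if __name__ == "__main__":
    print(f"SATA 3.0:     {sata3_mb_per_s():.0f} MB/s")
    for gen in (3, 4, 5):
        # x4 is the usual link width for an NVMe SSD (U.2 or M.2)
        print(f"PCIe {gen}.0 x4: {pcie_gb_per_s(gen, 4):.2f} GB/s")
```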

Storage Technologies

Solid State Drives (SSDs) using NVMe (Non-Volatile Memory Express) protocols deliver the highest performance available in contemporary data centres. Enterprise NVMe SSDs like Samsung’s PM9A3 and Intel’s D7-P5510 provide random access performance measured in hundreds of thousands of input/output operations per second (IOPS), enabling databases and applications to process transactions with minimal latency. These drives use sophisticated wear-levelling algorithms and over-provisioning to ensure longevity despite the limited write endurance of flash memory cells.
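Enterprise SSD endurance is usually quoted either as drive writes per day (DWPD) over the warranty period or as total terabytes written (TBW), and the two convert directly. The drive and workload in the sketch below are hypothetical.

```python
DAYS_PER_YEAR = 365


def terabytes_written(capacity_tb: float, dwpd: float, warranty_years: float) -> float:
    """Total rated writes (TBW) implied by a drive-writes-per-day rating."""
    return capacity_tb * dwpd * DAYS_PER_YEAR * warranty_years


def years_of_life(tbw: float, daily_writes_tb: float) -> float:
    """How long the rated endurance lasts at a steady daily write volume."""
    return tbw / (daily_writes_tb * DAYS_PER_YEAR)


if __name__ == "__main__":
    # Hypothetical 3.84 TB drive rated at 1 DWPD for 5 years.
    tbw = terabytes_written(3.84, 1.0, 5)
    print(f"Rated endurance: {tbw:,.0f} TB written")
    print(f"Lifetime at 2 TB/day of writes: {years_of_life(tbw, 2.0):.1f} years")
```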

The emergence of different NAND flash technologies creates storage tiers within the SSD category itself. Single-Level Cell (SLC) flash, storing one bit per cell, provides the highest performance and endurance but at substantial cost premiums. Storing more bits per cell (MLC, TLC and QLC store two, three and four bits respectively) increases capacity and lowers cost at the expense of write endurance and performance.

Hard Disk Drives (HDDs) continue to play essential roles in data centres. Enterprise drives like Seagate’s Exos and Western Digital’s Ultrastar series provide capacities exceeding 20TB whilst maintaining reliability measured in millions of hours for mean time between failures. For applications requiring massive capacity — AI models, video archives, scientific datasets, backup systems — HDDs deliver unmatched cost effectiveness. Modern enterprise HDDs incorporate advanced features like helium-filled enclosures that reduce drag on spinning disks, enabling lower power consumption.
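MTBF figures in the millions of hours are easier to interpret as an annualised failure rate (AFR). Assuming a constant failure rate (an exponential model, which is a simplification: real drives show early-life and wear-out failures, and field rates often exceed datasheet figures), the conversion is AFR = 1 − exp(−8760 / MTBF). The fleet size in the sketch below is hypothetical.

```python
import math

HOURS_PER_YEAR = 8_760


def annualised_failure_rate(mtbf_hours: float) -> float:
    """Probability a drive fails within a year, assuming a constant failure rate."""
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


def expected_failures_per_year(fleet_size: int, mtbf_hours: float) -> float:
    """Expected drive replacements per year across a fleet."""
    return fleet_size * annualised_failure_rate(mtbf_hours)


if __name__ == "__main__":
    mtbf = 2_500_000   # hours, a typical order of magnitude on enterprise HDD datasheets
    print(f"AFR: {annualised_failure_rate(mtbf):.2%}")
    print(f"Expected failures in 100,000 drives: {expected_failures_per_year(100_000, mtbf):.0f}")
```

Even with a datasheet AFR of around 0.35%, a fleet of 100,000 drives loses roughly one drive a day, which is why hot-swappable bays and redundancy are standard.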

Tape drives remain relevant for long-term archival storage despite appearing antiquated compared to other technologies. LTO (Linear Tape-Open), a format developed by a consortium of IBM, HPE and Quantum, provides enormous capacity (up to 18TB uncompressed for LTO-9) at extremely low cost per gigabyte for data that must be retained for decades. The sequential nature of tape access makes it unsuitable for interactive applications, but because cartridges can be stored offline, it provides long-term resilience.

Operational Characteristics

Hot swapping capabilities enable storage devices to be replaced without powering down servers or interrupting applications. Enterprise storage bays incorporate sophisticated connectors and power management systems that allow failed drives to be removed and replaced whilst the system continues operating. This capability becomes essential in 24/7 data centre environments where planned downtime for maintenance can cost thousands of pounds per minute in lost revenue.

The relationship between storage capacity, performance, and cost creates complex trade-offs that drive multi-tiered storage architectures. Ultra-high-performance NVMe SSDs might cost £1-2 per gigabyte whilst providing hundreds of thousands of IOPS, making them suitable for database transaction logs and frequently accessed application data. Large-capacity HDDs cost perhaps £0.02 per gigabyte but provide only hundreds of IOPS, relegating them to backup, archival, and bulk storage applications. Sophisticated automated storage tiering systems monitor data access patterns and automatically move frequently used data to faster tiers whilst migrating inactive data to lower-cost storage.
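An automated tiering policy can be as simple as a threshold on recent access counts. The sketch below places hypothetical datasets on NVMe or HDD according to how often they were read in a monitoring window, then prices the result using per-gigabyte figures in the ranges quoted above (£1.50 and £0.02, both assumptions). Production tiering systems use richer signals, such as recency, I/O size and read/write mix, and move data gradually.

```python
from dataclasses import dataclass

NVME_GBP_PER_GB = 1.50   # assumed, within the £1-2 range above
HDD_GBP_PER_GB = 0.02    # assumed


@dataclass
class Dataset:
    name: str
    size_gb: float
    accesses_last_week: int


def place(datasets: list[Dataset], hot_threshold: int) -> dict[str, str]:
    """Assign each dataset to 'nvme' if accessed at least hot_threshold times, else 'hdd'."""
    return {
        d.name: "nvme" if d.accesses_last_week >= hot_threshold else "hdd"
        for d in datasets
    }


def capacity_cost(datasets: list[Dataset], placement: dict[str, str]) -> float:
    """Purchase cost in pounds of the capacity each dataset occupies on its tier."""
    price = {"nvme": NVME_GBP_PER_GB, "hdd": HDD_GBP_PER_GB}
    return sum(d.size_gb * price[placement[d.name]] for d in datasets)


if __name__ == "__main__":
    data = [
        Dataset("orders-db-log", 200, 50_000),
        Dataset("product-images", 5_000, 1_200),
        Dataset("2019-backups", 40_000, 2),
    ]
    tiers = place(data, hot_threshold=1_000)
    print(tiers)
    all_nvme = {d.name: "nvme" for d in data}
    print(f"Tiered: £{capacity_cost(data, tiers):,.0f} "
          f"vs all-NVMe: £{capacity_cost(data, all_nvme):,.0f}")
```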

Network

Networking infrastructure carries information between servers within racks, between racks, and ultimately to the global internet. The complexity of this networking hierarchy reflects the diverse performance requirements — from microsecond-sensitive trading applications to petabyte-scale bulk data transfers.

Server-Level Networking

Network Interface Cards (NICs) serve as the fundamental connection point between servers and the broader network infrastructure. Modern data centre servers typically include multiple NICs to provide redundancy, load distribution, and connection to different network segments simultaneously. Intel’s E810 series and Broadcom’s NetXtreme represent current-generation NICs supporting speeds of 25Gbps, 50Gbps, and 100Gbps per port whilst incorporating hardware acceleration for encryption, packet processing, and network virtualisation functions.

Host Channel Adapters (HCAs) enable servers to connect to high-performance interconnects like InfiniBand and Ethernet-based Remote Direct Memory Access (RDMA) networks. Companies like Mellanox (now part of NVIDIA) and Intel produce HCAs that enable ultra-low-latency communication between servers, essential for high-performance computing clusters and distributed storage systems. These adapters can transfer data directly between server memory systems without involving the main CPU, dramatically reducing both latency and processor overhead.

Smart NICs and Data Processing Units (DPUs) incorporate dedicated processors that can handle network packet processing, security functions, and storage protocols independently of the main server CPU. NVIDIA’s BlueField and Intel’s Mount Evans IPUs exemplify this trend, enabling servers to offload networking tasks whilst improving overall system security and performance.

Rack-Level Network Distribution

Top-of-Rack (ToR) switches serve as the primary aggregation point for all servers within a rack, typically providing 24-48 ports at speeds ranging from 10Gbps to 100Gbps per port. Vendors like Cisco, Arista, and Juniper dominate this market with switches designed specifically for data centre environments. These switches must handle enormous aggregate bandwidth — a fully populated rack might generate several terabits per second of internal traffic that must be managed, prioritised, and forwarded appropriately.
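Whether a ToR switch becomes a bottleneck depends on its oversubscription ratio: the capacity facing the servers divided by the capacity of the uplinks towards the rest of the network. The port counts in the sketch below describe a hypothetical configuration.

```python
def oversubscription_ratio(down_ports: int, down_gbps: float,
                           up_ports: int, up_gbps: float) -> float:
    """Server-facing capacity divided by uplink capacity (1.0 means non-blocking)."""
    return (down_ports * down_gbps) / (up_ports * up_gbps)


if __name__ == "__main__":
    # Hypothetical ToR: 48 x 25G server ports with 6 x 100G uplinks.
    print(f"{oversubscription_ratio(48, 25, 6, 100):.1f}:1 oversubscribed")
    # With 12 x 100G uplinks the same switch would be non-blocking.
    print(f"{oversubscription_ratio(48, 25, 12, 100):.1f}:1")
```

Operators accept ratios above 1:1 because servers rarely all transmit at line rate at once; fabrics for latency-sensitive or AI training workloads are often built closer to 1:1.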

Cabling infrastructure within racks must balance performance, density, and maintainability requirements. Direct Attach Copper (DAC) cables provide cost-effective short-distance connections within racks, while fibre optic connections enable longer distances and higher speeds.

Advanced cabling systems follow structured cabling standards such as TIA-942, which define pathway management, connector types, and testing procedures. These standards ensure that cabling installations can support equipment changes and upgrades without requiring complete rewiring of facilities.

Facility-Wide Networking

Core switches and routers form the backbone of data centre networking, aggregating traffic from hundreds of racks and providing connections to external networks. These systems must handle aggregate traffic measured in multiple terabits per second whilst maintaining microsecond-level forwarding performance. Cisco’s Nexus series, Arista’s 7800 series, and Juniper’s QFX series represent current state-of-the-art in data centre core networking.

Spine-and-leaf architectures have largely replaced traditional hierarchical networks in modern data centres. In this topology, every rack-level “leaf” switch connects directly to every “spine” switch, creating multiple parallel paths for traffic flow. This architecture provides predictable performance, avoids the bottlenecks of hierarchical designs, and enables horizontal scaling by adding spine switches as the facility grows. The result is that traffic between servers in any two racks crosses at most three switches (leaf, spine, leaf), providing consistent low-latency performance across the entire facility.
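The fixed path length and the multiple parallel paths can be checked with a breadth-first search over a small model of the fabric. The sketch below builds a hypothetical leaf-spine network, with every leaf linked to every spine, then counts the links on the shortest path between two leaf switches and how many equal-cost shortest paths exist. Servers hang off the leaves and are omitted.

```python
from collections import deque


def build_leaf_spine(num_leaves: int, num_spines: int) -> dict[str, set[str]]:
    """Adjacency sets for a fabric in which every leaf switch links to every spine switch."""
    graph: dict[str, set[str]] = {}
    for i in range(num_leaves):
        graph[f"leaf{i}"] = {f"spine{j}" for j in range(num_spines)}
    for j in range(num_spines):
        graph[f"spine{j}"] = {f"leaf{i}" for i in range(num_leaves)}
    return graph


def shortest_path_stats(graph: dict[str, set[str]], src: str, dst: str) -> tuple[int, int]:
    """Breadth-first search returning (links on a shortest path, number of shortest paths)."""
    dist = {src: 0}
    count = {src: 1}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        for neighbour in graph[node]:
            if neighbour not in dist:                 # first time reached: one level further out
                dist[neighbour] = dist[node] + 1
                count[neighbour] = count[node]
                queue.append(neighbour)
            elif dist[neighbour] == dist[node] + 1:   # another equally short route
                count[neighbour] += count[node]
    return dist[dst], count[dst]


if __name__ == "__main__":
    fabric = build_leaf_spine(num_leaves=8, num_spines=4)
    links, paths = shortest_path_stats(fabric, "leaf0", "leaf7")
    print(f"{links + 1} switches traversed, {paths} equal-cost paths")
```

Adding a spine switch adds one more equal-cost path between every pair of leaves without lengthening any path, which is the horizontal scaling property of this topology.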

External Connectivity

Internet Exchange Points (IXPs) provide critical interconnection services where data centres connect to multiple internet service providers, content delivery networks, and other network operators. Major examples include the London Internet Exchange (LINX) and the Amsterdam Internet Exchange (AMS-IX).

Submarine cable connections link data centres to international networks, with major facilities often located near submarine cable landing points to minimise latency for global communications. The TeleGeography Submarine Cable Map illustrates the physical infrastructure that enables global internet connectivity, highlighting why data centres cluster in coastal regions with access to multiple international cable systems.

WAN (Wide Area Network) connections link distributed data centre facilities belonging to the same organisation or cloud provider. These private networks often use MPLS (Multi-Protocol Label Switching) or SD-WAN technologies to provide guaranteed performance and security for inter-facility communications. Major cloud providers operate extensive private networks — Google’s private network spans over 100 points of presence worldwide, whilst Amazon’s backbone network connects AWS regions through dedicated fibre connections.

Specialised Networking Requirements

Low-latency networks for financial trading applications require specialised equipment and techniques that prioritise speed over other considerations. Solarflare (now part of AMD/Xilinx) and Mellanox produce NICs with hardware acceleration that can process and forward packets in under a microsecond. These networks often rely on kernel-bypass software, such as Solarflare’s OpenOnload, that skips the operating system’s network stack to achieve the lowest possible latency.

High-Performance Computing (HPC) networks use technologies like InfiniBand that provide both ultra-low latency and enormous aggregate bandwidth for parallel computing applications. NVIDIA’s InfiniBand switches can deliver over 100 terabits per second of aggregate bandwidth with end-to-end latencies measured in hundreds of nanoseconds, enabling supercomputers to coordinate calculations across thousands of processors simultaneously.

Power

Power systems in data centres are one of the most critical and complex aspects of facility design, as any interruption can instantly disable thousands of servers and cost millions in lost revenue. A large hyperscale facility might require 100-500 megawatts continuously, equivalent to powering a medium-sized city.

Power Distribution Infrastructure

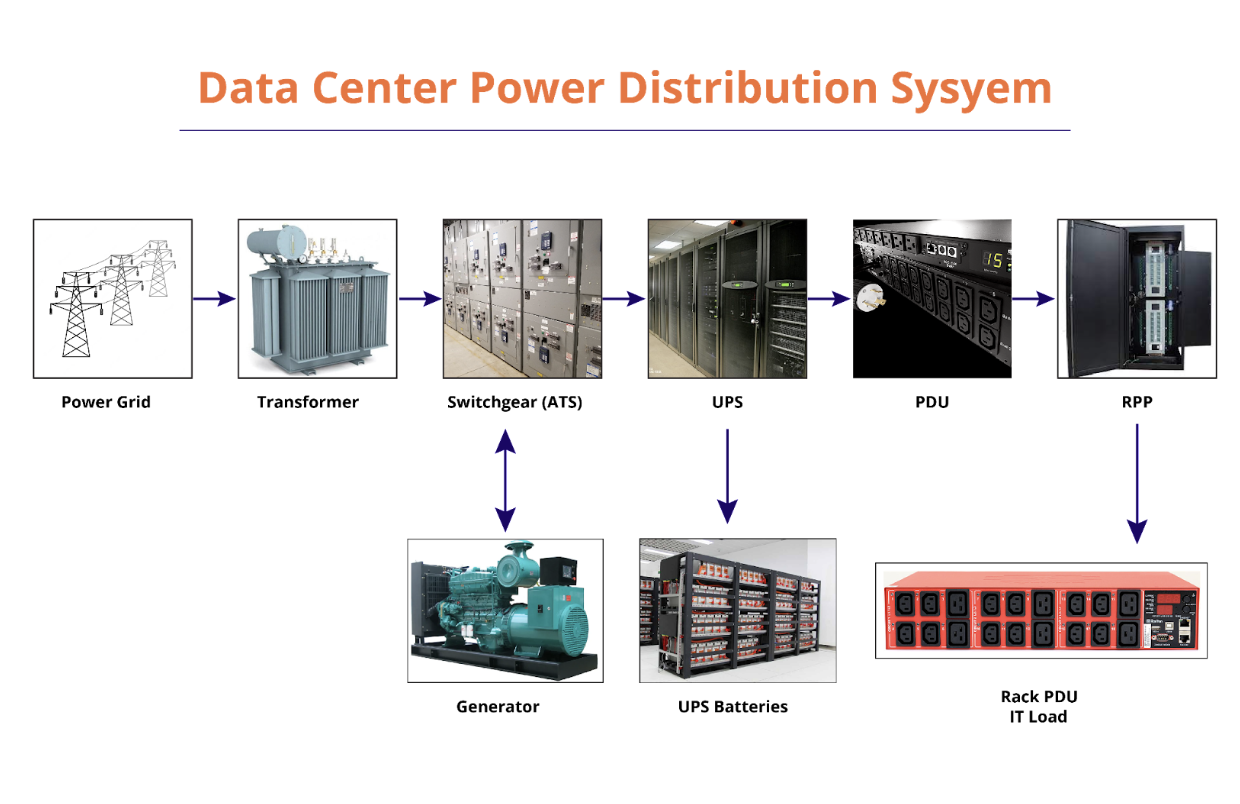

Transformers and switchgear form the foundation of data centre electrical systems, stepping down the high voltage of incoming transmission lines. Utility power typically arrives at data centres at voltages between 11kV and 138kV before being transformed through multiple stages to the 400V three-phase power commonly supplied to racks. ABB and Schneider Electric manufacture specialised transformers designed for continuous high-load operation.
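The power a three-phase feed can deliver follows from P = √3 × V × I × PF, where V is the line-to-line voltage, I the current per phase and PF the power factor. The sketch below applies it to a hypothetical 400 V, 32 A rack feed. Real circuits are also derated for continuous load according to local electrical codes, which this ignores.

```python
import math


def three_phase_kw(line_voltage_v: float, current_a: float, power_factor: float = 1.0) -> float:
    """Real power in kW delivered by a balanced three-phase supply."""
    return math.sqrt(3) * line_voltage_v * current_a * power_factor / 1_000


if __name__ == "__main__":
    # Hypothetical rack feed: 400 V line-to-line, 32 A per phase.
    print(f"{three_phase_kw(400, 32):.1f} kW at unity power factor")
    print(f"{three_phase_kw(400, 32, power_factor=0.95):.1f} kW at PF 0.95")
```

A single-phase 230 V, 32 A feed, by comparison, delivers about 7.4 kW, which is why high-density racks are fed with three-phase power.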

Advanced switchgear systems provide protection, isolation, and control capabilities essential for maintaining power quality and safety. Modern installations incorporate arc flash protection, sophisticated monitoring systems, and automated switching capabilities that can isolate fault conditions within milliseconds to prevent cascading failures across the facility.

Power Distribution Units (PDUs) distribute electricity from facility-level systems to individual racks and servers. Modern intelligent PDUs like Raritan’s PX series and Server Technology’s PDUs provide sophisticated monitoring and control capabilities. These units can measure power consumption at individual outlet levels, implement remote power cycling for unresponsive equipment, and integrate with facility management systems to provide real-time visibility into power utilisation patterns.

The evolution toward higher power densities has driven development of innovative PDU technologies. Three-phase PDUs enable more efficient power distribution for high-density racks, whilst busway power distribution systems provide flexible, high-capacity power distribution that can be reconfigured as data centre layouts evolve.

Backup Power Systems

Uninterruptible Power Supplies (UPS) provide the critical bridge between utility power failures and backup generator startup. Enterprise-grade UPS systems like Schneider Electric’s Galaxy series and Eaton’s 9395 series can provide power for 10-15 minutes during utility outages whilst backup generators start and stabilise. Modern UPS systems incorporate lithium-ion batteries that provide longer runtime, faster charging, and smaller physical footprints compared to traditional lead-acid batteries.

The architecture of UPS systems affects both reliability and efficiency. N+1 redundancy configurations provide one spare UPS module beyond what the full facility load requires, so the load is still carried if any single unit fails, whilst 2N redundancy provides two completely separate, parallel UPS systems, either of which can carry the full load on its own.
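The difference between these schemes is easiest to see as a sizing exercise. This sketch assumes a hypothetical 1,200 kW critical load and 500 kW UPS modules, and ignores growth margins and battery sizing.

```python
import math

def ups_modules(load_kw: float, module_kw: float, scheme: str) -> int:
    """Number of UPS modules for a critical load under a redundancy scheme.

    N   : just enough modules to carry the load
    N+1 : one spare module beyond N
    2N  : two independent systems, each able to carry the full load
    """
    n = math.ceil(load_kw / module_kw)  # modules needed to carry the load alone
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1
    if scheme == "2N":
        return 2 * n
    raise ValueError(f"unknown redundancy scheme: {scheme}")

# Hypothetical 1,200 kW critical load served by 500 kW modules
for scheme in ("N", "N+1", "2N"):
    count = ups_modules(1200, 500, scheme)
    load_share = 1200 / (count * 500)  # fraction of capacity each module runs at
    print(f"{scheme}: {count} modules, each at {load_share:.0%} load")
```

For these figures, N+1 needs 4 modules (each running at 60% of capacity) and 2N needs 6 (each at 40%). Because UPS modules are generally less efficient at low load, the extra resilience of 2N carries an efficiency cost as well as a capital one.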

Backup generators provide long-term power generation capability during extended utility outages. Modern data centre generators typically use diesel fuel and can operate continuously for days or weeks. Caterpillar, Cummins, and Generac manufacture generators specifically designed for data centre applications, incorporating features like automatic load bank testing, sophisticated monitoring systems, and paralleling switchgear that enables multiple generators to share facility loads.

Generator fuel must be carefully managed. Large data centres maintain on-site fuel storage measured in tens of thousands of litres, with contracts ensuring fuel delivery during extended emergency situations. Some facilities incorporate natural gas generators that can operate indefinitely without fuel delivery, though this requires reliable gas pipeline infrastructure.
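A back-of-the-envelope runtime estimate shows how on-site storage translates into hours. The consumption figure below (0.27 litres per kWh) is an assumed, illustrative value for a large diesel generator near full load; real figures come from the manufacturer's data sheet and vary with load.

```python
def generator_runtime_hours(fuel_litres: float, load_kw: float,
                            litres_per_kwh: float = 0.27) -> float:
    """Hours of runtime from stored fuel at a constant electrical load.

    litres_per_kwh is an ASSUMED, illustrative specific fuel consumption for a
    large diesel generator near full load; use the manufacturer's figure.
    """
    litres_per_hour = load_kw * litres_per_kwh
    return fuel_litres / litres_per_hour

# Hypothetical site: 50,000 litres of diesel supporting a 2 MW load
hours = generator_runtime_hours(50_000, 2_000)
print(f"{hours:.0f} hours (about {hours / 24:.1f} days)")
```

Under these assumptions, a hypothetical 50,000-litre store keeps a 2 MW load running for roughly 93 hours, just under four days, which is why fuel delivery contracts matter for longer outages.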

Power Monitoring and Management

Power Monitoring and Management Systems provide real-time visibility into electrical consumption, power quality, and system health. Platforms like Schneider Electric’s StruxureWare and Eaton’s Power Xpert integrate data from UPS systems, PDUs, generators, and environmental sensors to provide comprehensive facility oversight.

These systems enable sophisticated power management strategies including demand response participation where data centres can reduce power consumption during peak grid demand periods, power capping that limits server power consumption to prevent overloading electrical infrastructure, and predictive analytics that can identify potential equipment failures before they occur.
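Power capping can be as simple as a proportional policy. The sketch below assumes hypothetical server names and readings and only computes the allowances; how caps are enforced on real hardware depends on the vendor's management tooling and is not shown.

```python
def apply_power_caps(demands_w: dict[str, float], budget_w: float) -> dict[str, float]:
    """Scale each server's power allowance so the total stays within budget.

    Minimal proportional policy: if total demand fits, every server gets what
    it asks for; otherwise every server is capped by the same fraction.
    """
    total = sum(demands_w.values())
    if total <= budget_w:
        return dict(demands_w)
    scale = budget_w / total
    return {name: demand * scale for name, demand in demands_w.items()}

demands = {"srv-a": 800.0, "srv-b": 1200.0, "srv-c": 1000.0}  # hypothetical readings
caps = apply_power_caps(demands, budget_w=2400.0)
print({name: round(cap) for name, cap in caps.items()})
```

Here total demand is 3,000 W against a 2,400 W budget, so every server is held to 80% of its request. A more realistic policy might add priorities and minimum per-server floors so that critical workloads are capped last.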

Renewable and Local Power Generation

The massive power requirements of data centres have driven significant investment in renewable energy sources. Major cloud providers have become among the largest corporate purchasers of renewable energy globally. Google achieved 100% renewable energy matching for its global operations, whilst Microsoft has committed to being carbon negative by 2030.

Solar power installations are increasingly common at data centre sites, with facilities incorporating both rooftop and adjacent ground-mounted photovoltaic arrays. Apple’s data centre in North Carolina includes a 100-acre solar installation, whilst Facebook (now Meta) sources solar power for many of its facilities, often through large off-site solar farms.

Wind power provides another renewable energy source, particularly effective in regions with consistently high winds. Data centres increasingly locate in areas with abundant wind generation, signing long-term power purchase agreements with wind farms to ensure renewable energy supply.

Some facilities explore more exotic low-carbon technologies. Microsoft’s Project Natick investigated underwater data centres that could potentially be powered by offshore wind and tidal generation, whilst Google has invested in advanced geothermal projects that could provide baseload renewable power. Rolls-Royce expects to deploy Small Modular Reactors (small-scale nuclear power) to provide local power generation at new AI-focused facilities.

Power Budgets and Constraints

Power budgeting in modern data centres reflects a fundamental shift in operational constraints. Historically, data centres were constrained by server density, cooling capacity, or physical space.

While it has been widely said that power consumption has become the primary limiting factor for many facilities, the latest trend driven by AI appears to have reversed this. The old motto returns: “If you can cool it, you can power it.” Power delivery has, in effect, ceased to be the constraint.

A single AI training server equipped with eight NVIDIA H100 GPUs can consume over 10 kilowatts continuously, roughly 20 times the average power draw of a typical home.

Modern electrical distribution systems can deliver enormous amounts of power to individual racks — some installations support over 100 kilowatts per rack. The challenge becomes removing the heat generated by this power consumption rather than delivering the electricity itself.

This dynamic has profound implications for data centre design and operations. Facilities must balance the higher revenue potential of power-hungry AI workloads against the infrastructure investments required to support them. Some operators are experimenting with dynamic power allocation systems that can shift power between different facility areas based on workload requirements, enabling more flexible utilisation of available electrical capacity.

Power Usage Effectiveness (PUE) has become the standard measure of data centre efficiency, calculated as the ratio of total facility power consumption to IT equipment power consumption. Leading facilities achieve PUE ratios below 1.2, meaning that for every kilowatt consumed by computing equipment, less than 0.2 kilowatts is consumed by cooling, lighting, and other facility systems. Google reports a fleet-wide average PUE of around 1.1, achieved through advanced cooling technologies and AI-powered optimisation systems.
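The PUE calculation itself is a simple ratio. This sketch uses hypothetical figures for a facility with 10 MW of IT load drawing 11.5 MW in total.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT power must be positive")
    return total_facility_kw / it_kw

def overhead_per_it_kw(pue_value: float) -> float:
    """kW used by cooling, lighting, etc. for every 1 kW delivered to IT equipment."""
    return pue_value - 1.0

# Hypothetical facility: 10 MW of IT load, 11.5 MW drawn in total
p = pue(11_500, 10_000)
print(f"PUE {p:.2f}, overhead {overhead_per_it_kw(p):.2f} kW per IT kW")
```

In practice PUE is normally reported from energy measured over a full year rather than a single instantaneous reading, since cooling overhead varies with the seasons.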

Cooling

Cooling systems represent one of the most critical and power-hungry aspects of data centre infrastructure, as modern computing equipment generates enormous amounts of heat that must be continuously removed to prevent performance degradation and hardware failure. The challenge intensifies as server densities increase — a single rack consuming 30-50 kilowatts of power will generate an equivalent amount of heat that must be extracted and dissipated to the environment.
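Since essentially all the electrical power a rack draws ends up as heat, the airflow needed to remove it follows from a simple heat balance: heat removed = air density × volumetric flow × specific heat × temperature rise. The sketch assumes typical values for air and a hypothetical 40 kW rack whose exhaust runs 12 K warmer than its inlet.

```python
AIR_DENSITY_KG_M3 = 1.2   # approx. air density near 20 °C at sea level (assumed)
AIR_CP_J_KG_K = 1005.0    # approx. specific heat capacity of air

def required_airflow_m3s(heat_w: float, delta_t_k: float) -> float:
    """Airflow needed to remove heat_w when air warms by delta_t_k from server
    inlet to exhaust: heat = density * flow * specific heat * temperature rise."""
    return heat_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * delta_t_k)

# Hypothetical 40 kW rack with a 12 K inlet-to-exhaust temperature rise
flow = required_airflow_m3s(40_000, 12)
print(f"{flow:.2f} m^3/s, or about {flow * 3600:,.0f} m^3/h")
```

That works out to roughly 2.8 cubic metres of air per second for a single rack; halving the permitted temperature rise would double it.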

Server-Level Cooling

Heat sinks and fans form the first line of thermal management within individual servers. Server fans operate at higher speeds and move substantially more air than desktop computer fans, but they create significant acoustic challenges when deployed at scale.

A single rack containing 42 servers might house over 400 individual fans, generating noise levels that require hearing protection for maintenance personnel. Advanced server designs incorporate variable speed fan control that adjusts fan speeds based on component temperatures and system loads, balancing cooling performance against noise generation and power consumption.
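Variable-speed fan control is essentially a mapping from temperature to fan speed. The sketch below uses a hypothetical linear ramp between two made-up temperature thresholds, treats duty cycle as proportional to fan speed (a simplifying assumption), and applies the fan affinity law that fan power rises roughly with the cube of speed.

```python
def fan_duty(temp_c: float, t_min: float = 30.0, t_max: float = 70.0,
             duty_min: float = 0.2, duty_max: float = 1.0) -> float:
    """Map a component temperature to a fan duty cycle (0-1) using a linear
    ramp between two hypothetical thresholds."""
    if temp_c <= t_min:
        return duty_min
    if temp_c >= t_max:
        return duty_max
    fraction = (temp_c - t_min) / (t_max - t_min)
    return duty_min + fraction * (duty_max - duty_min)

def relative_fan_power(duty: float) -> float:
    """Fan affinity law (approximate): power scales with the cube of speed."""
    return duty ** 3

for temp in (25, 50, 75):
    duty = fan_duty(temp)
    print(f"{temp} °C -> duty {duty:.2f}, power {relative_fan_power(duty):.3f} of maximum")
```

Under the cube law, running fans at 60% speed draws only about a fifth of full-speed power, which is why letting fans slow down when components are cool saves so much energy and noise.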

Liquid cooling systems within servers are becoming increasingly common for high-performance applications. All-in-One (AIO) coolers use closed-loop systems with coolant circulating between processors and radiators within individual servers. More advanced implementations use direct-to-chip cooling where liquid coolant flows through cooling plates mounted directly onto processors, providing dramatically superior heat removal compared to air cooling. Intel and AMD are experimenting with processors specifically designed for liquid cooling applications.

Liquid cooling is becoming essential for AI and high-performance computing workloads. A single NVIDIA H100 GPU generates up to 700 watts of heat in a space smaller than a paperback book, heat densities that push traditional air cooling to its limits. Air-cooled systems such as NVIDIA’s DGX H100 still rely on large fan arrays, but rack-scale systems such as the GB200 NVL72 are designed for direct liquid cooling.

Rack-Level Cooling

Rack-level cooling systems provide targeted cooling for entire server racks, particularly effective for high-density installations where traditional facility cooling cannot provide adequate capacity. Rear-door heat exchangers mount behind server racks and use chilled water to cool the hot air exhausted by servers before it enters the general facility environment. Companies like CoolIT Systems and Asetek manufacture rack-level cooling systems that can remove 30-50 kilowatts of heat per rack.

In-rack coolant distribution units (CDUs) circulate coolant to liquid-cooled servers within racks, typically via a heat exchanger that isolates the server loop from the facility’s chilled water, whilst monitoring temperatures and flow rates to ensure proper cooling system operation. These systems integrate with facility management platforms to provide real-time visibility into cooling performance and enable predictive maintenance to prevent cooling system failures.
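The same heat balance used for air applies to liquid cooling, and it shows why coolant distribution units track flow rate so closely. This sketch assumes pure water (specific heat about 4,186 J/kg·K, density about 1 kg per litre); many systems use water-glycol mixtures, which carry somewhat less heat per litre.

```python
WATER_CP_J_KG_K = 1004.0 * 0 + 4186.0  # approx. specific heat capacity of water
WATER_KG_PER_L = 1.0                    # approx. density of water, kg per litre

def coolant_flow_lpm(heat_w: float, delta_t_k: float) -> float:
    """Water flow (litres per minute) needed to absorb heat_w with a
    supply-to-return temperature rise of delta_t_k: Q = m_dot * cp * dT."""
    mass_flow_kg_s = heat_w / (WATER_CP_J_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_KG_PER_L * 60.0

# Hypothetical 40 kW liquid-cooled rack with a 10 K coolant temperature rise
print(f"{coolant_flow_lpm(40_000, 10):.1f} litres per minute")
```

A hypothetical 40 kW rack with a 10 K coolant temperature rise needs roughly 57 litres per minute. Per unit volume, water carries about 3,500 times as much heat as air, which is why a modest pipe can do the work of a very large volume of moving air.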

Facility-Level Cooling

Computer Room Air Conditioning (CRAC) units provide the traditional approach to data centre cooling, using direct expansion refrigeration systems to cool air within data centre spaces. Modern CRAC units like those manufactured by Liebert (now Vertiv) and Schneider Electric provide precise temperature and humidity control whilst offering high reliability through redundant components and advanced monitoring systems.

Air Handling Units (AHUs) represent a more sophisticated approach, using chilled water from central cooling plants rather than direct refrigeration. AHUs can achieve higher efficiency and provide greater cooling capacity than CRAC units, making them suitable for larger installations. The separation of cooling generation (chillers) from air handling enables more flexible facility design and improved operational efficiency.

Hot and cold aisle containment systems revolutionised data centre cooling efficiency by preventing mixing of hot exhaust air with cold supply air. Cold aisle containment encloses the fronts of server racks, ensuring that servers receive cool air directly from CRAC units without contamination from hot exhaust. Hot aisle containment encloses the rear of server racks, capturing hot exhaust air and directing it back to cooling systems without heating the general facility space.

These containment systems enable significant efficiency improvements — facilities can operate at higher supply air temperatures whilst maintaining proper server inlet temperatures, reducing cooling energy consumption by 20-40%. APC, Chatsworth Products, and other manufacturers provide modular containment systems that can be retrofitted to existing facilities.

Dissipating the Heat

Chillers and cooling towers form the heart of large-scale data centre cooling systems. Water-cooled chillers use refrigeration cycles to chill water that circulates through the facility to remove heat from servers and cooling systems. Carrier, Trane, and Johnson Controls manufacture chillers specifically designed for data centre applications, providing high efficiency and reliability under continuous operation.

Cooling towers enable heat rejection to the external environment through evaporative cooling, providing much higher efficiency than air-cooled systems in most climates. Modern cooling towers incorporate advanced materials and designs that minimise water consumption whilst maximising heat rejection capacity. However, cooling towers require substantial water supplies and must be carefully managed to prevent biological contamination.

Free cooling systems take advantage of cool ambient temperatures to reduce mechanical cooling requirements. Air-side economisers bring outside air into data centres when external temperatures are sufficiently low. Water-side economisers apply the same idea to the water loop, using cooling towers or dry coolers to chill water directly, bypassing the chillers, when outside conditions allow. These systems can provide enormous energy savings in cooler climates; facilities in northern Europe or Canada can operate with minimal mechanical cooling for significant portions of the year.
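An economiser controller has to decide, hour by hour, how much of the cooling outside conditions can provide. The sketch below is hypothetical: the supply setpoint, approach and partial-cooling band are made-up parameters, and it ignores humidity limits that real controllers must also respect.

```python
from collections import Counter

def cooling_mode(outside_temp_c: float, supply_setpoint_c: float = 24.0,
                 approach_k: float = 4.0, partial_band_k: float = 6.0) -> str:
    """Pick a cooling mode for a hypothetical air-side economiser.

    approach_k: how far below the supply setpoint outside air must be to do
        all the cooling on its own.
    partial_band_k: band above that in which outside air is still cooler than
        the hot return air, so it helps, but chillers must top up.
    """
    free_cooling_limit = supply_setpoint_c - approach_k
    if outside_temp_c <= free_cooling_limit:
        return "free"
    if outside_temp_c <= free_cooling_limit + partial_band_k:
        return "partial"
    return "mechanical"

# Hypothetical hourly outside temperatures (°C)
hourly_temps = [3, 8, 14, 19, 22, 25, 29]
print(Counter(cooling_mode(t) for t in hourly_temps))
```

Fed with a year of hourly weather data for a site, the same function gives a rough estimate of how many hours it could run without chillers.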

Exotic and Experimental Cooling Approaches

Underwater data centres represent perhaps the most radical approach to cooling challenges. Microsoft’s Project Natick deployed prototype data centres on the ocean floor, using seawater for cooling whilst potentially achieving superior reliability due to the stable, controlled environment. The stable temperature of seawater at the seabed provides ideal conditions for cooling electronic equipment, though the approach presents substantial challenges in deployment, maintenance, and equipment access.

Heat recovery and reuse systems capture waste heat from data centres for beneficial use rather than simply rejecting it to the environment. District heating systems in northern European cities like Helsinki and Copenhagen capture data centre waste heat to provide residential and commercial heating. This approach can achieve remarkable overall efficiency by using what would otherwise be waste heat for productive purposes.

Swimming pool heating represents a more modest heat recovery application. Some smaller data centres use heat exchangers to transfer waste heat to swimming pools, providing free heating for recreational facilities. While the scale is limited compared to district heating systems, this approach demonstrates the potential for beneficial heat reuse in appropriate applications.

Hot-sand batteries are an emerging form of thermal storage and, for some uses, an alternative to lithium-ion batteries. They may be able to store heat recovered from data centres for later use, possibly including power generation (effectively turning some of the heat output back into electricity), although the low temperature of data centre waste heat limits how efficiently it can be converted back into electricity.

Fire Prevention & Suppression

Fire protection in data centres requires systems that can rapidly detect and suppress fires whilst minimising damage to sensitive electronic equipment. Traditional water-based sprinkler systems, whilst effective for conventional buildings, can cause more damage than the fires they’re designed to suppress when applied to highly valuable computing equipment.

Detection Systems

Early warning fire detection systems in data centres employ multiple detection technologies working in concert to identify potential fire conditions before visible flames or significant smoke production. Very Early Smoke Detection Apparatus (VESDA) systems, manufactured by companies like Xtralis (now part of Honeywell), continuously sample air from throughout the facility and can detect smoke concentrations thousands of times lower than conventional smoke detectors. These systems provide warnings of potential fire conditions 10-60 minutes before traditional detection methods.

Multi-sensor detection systems combine smoke, heat, and sometimes carbon monoxide sensors to reduce false alarms whilst maintaining rapid response capability. The challenge in data centre environments lies in distinguishing between normal operational conditions — which may include warm air currents, dust from equipment operation, and occasional component failures producing minor amounts of smoke — and genuine fire emergencies requiring immediate response.

Advanced detection systems incorporate environmental monitoring that considers factors like airflow patterns, equipment locations, and normal thermal signatures to provide context for alarm conditions. This intelligence helps facility operators distinguish between minor equipment failures that require maintenance attention and genuine emergency situations demanding immediate evacuation and suppression system activation.
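The distinction between "send someone to look" and "this is an emergency" can be expressed as staged smoke thresholds plus corroboration from a second, independent sensor type. The thresholds, units and heat-rise limit below are hypothetical illustrations, not values from any product or fire code; real response logic is set by fire engineers and local regulations.

```python
# Hypothetical staged thresholds in % obscuration per metre, most severe first.
SMOKE_THRESHOLDS = [("fire", 0.2), ("action", 0.1), ("alert", 0.05)]

def smoke_level(obscuration_pct_per_m: float) -> str:
    """Classify an aspirating smoke detector reading into a staged alarm level."""
    for level, threshold in SMOKE_THRESHOLDS:
        if obscuration_pct_per_m >= threshold:
            return level
    return "normal"

def response(obscuration_pct_per_m: float, heat_rise_k: float,
             heat_rise_limit_k: float = 8.0) -> str:
    """Choose a response, using an independent heat sensor to corroborate smoke."""
    level = smoke_level(obscuration_pct_per_m)
    if level == "normal":
        return "none"
    if level in ("alert", "action"):
        return "investigate"   # early warning: send a technician to look
    if heat_rise_k >= heat_rise_limit_k:
        return "emergency"     # heavy smoke AND abnormal heat: evacuate, suppress
    return "alarm"             # heavy smoke only: raise the alarm, verify urgently

print(response(0.07, 0.5))    # investigate
print(response(0.25, 12.0))   # emergency
```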

Clean Agent Fire Suppression

Clean agent fire suppression systems use gaseous suppression agents that extinguish fires without leaving residue that could damage electronic equipment. FM-200 (heptafluoropropane) and Novec 1230 represent the current generation of clean agents, designed to replace older halon systems that were phased out because halon depletes the ozone layer. Companies like Kidde and Johnson Controls manufacture systems specifically designed for data centre applications.

Depending on the agent, these systems work either by reducing oxygen levels below the point at which combustion can be sustained (inert gases) or by absorbing heat and interrupting the chemical chain reaction of combustion (chemical agents such as FM-200 and Novec 1230). Clean agents can suppress fires within 10-30 seconds of discharge whilst remaining safe for personnel who might be present during activation. The systems must be carefully engineered to account for room volume, air leakage rates, and safe concentration limits for human occupancy.
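The quantity of agent scales with room volume and target concentration. This sketch uses the total-flooding relationship commonly given for halocarbon agents (for example in NFPA 2001), W = (V / S) × C / (100 - C), where S is the agent's specific vapour volume at the design temperature. The room size, 7% design concentration and specific volume of 0.137 m³/kg are assumed, illustrative values; a real design adds allowances for leakage, altitude and safety factors, and must keep the concentration within the agent's safe limit for occupied spaces.

```python
def halocarbon_agent_kg(room_volume_m3: float, design_conc_pct: float,
                        specific_volume_m3_per_kg: float) -> float:
    """Agent mass for a total-flooding halocarbon system:
    W = (V / S) * (C / (100 - C)).
    Leakage, altitude and safety factors are NOT included."""
    if not 0 < design_conc_pct < 100:
        raise ValueError("design concentration must be between 0 and 100 %")
    return (room_volume_m3 / specific_volume_m3_per_kg) * (
        design_conc_pct / (100.0 - design_conc_pct))

# Hypothetical 500 m^3 room at 7 % concentration, with an ASSUMED specific
# vapour volume of 0.137 m^3/kg (roughly FM-200 near 20 °C)
print(f"{halocarbon_agent_kg(500, 7.0, 0.137):.0f} kg of agent")
```

The C / (100 - C) term, rather than simply C / 100, reflects that some of the room's atmosphere is pushed out as the agent discharges. Under these assumptions the room needs roughly 275 kg of agent.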

Pre-action sprinkler systems serve as backup to clean agent systems, requiring two independent detection events before water discharge. This dual-detection requirement dramatically reduces the risk of accidental water damage from spurious alarms whilst maintaining the reliability of water-based suppression for situations where clean agents might prove insufficient.
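The two-detection rule can be modelled as a small state machine. This is a hypothetical sketch of a double-interlock arrangement: the dry pipework is only charged with water after two different detectors have alarmed, and water reaches the room only through a sprinkler head that has also opened from heat.

```python
from dataclasses import dataclass, field

@dataclass
class PreActionZone:
    """Hypothetical double-interlock pre-action zone.

    The pipework stays dry until two *different* detectors have alarmed;
    water is then discharged only through a sprinkler head that has opened.
    """
    triggered: set[str] = field(default_factory=set)

    def detector_alarm(self, detector_id: str) -> None:
        self.triggered.add(detector_id)  # repeats from one detector count once

    @property
    def pipes_charged(self) -> bool:
        return len(self.triggered) >= 2

    def discharges(self, sprinkler_head_open: bool) -> bool:
        return self.pipes_charged and sprinkler_head_open

zone = PreActionZone()
zone.detector_alarm("smoke-A3")
zone.detector_alarm("smoke-A3")   # a faulty detector repeating itself
print(zone.pipes_charged)         # False
zone.detector_alarm("heat-A3")
print(zone.discharges(sprinkler_head_open=True))  # True
```

Repeated alarms from a single faulty detector never charge the pipes on their own, which is exactly the protection against spurious discharge that the dual-detection requirement provides.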

Water-Based Systems

Water mist systems provide an alternative approach that uses very fine water droplets to suppress fires whilst minimising water damage compared to traditional sprinklers. Systems from manufacturers like Marioff and Danfoss create water droplets measuring 50-200 microns that can effectively suppress fires whilst using significantly less water than conventional sprinkler systems.

The fine water mist provides several advantages for data centre applications: the small droplets absorb heat more efficiently than larger water drops, the reduced water volume minimises flooding damage, and the mist can penetrate into equipment enclosures more effectively than conventional water sprays. However, water mist systems require higher pressure pumping systems and more complex piping compared to traditional sprinklers.
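The heat-absorption advantage of fine mist is largely geometry: splitting the same water into smaller droplets multiplies the surface area exposed to the fire. For equal spheres, surface area per unit volume is 6/d, so this sketch compares one litre as 100 micron mist against an assumed 1 mm sprinkler-sized drop.

```python
def spray_surface_area_m2(volume_litres: float, droplet_diameter_um: float) -> float:
    """Total surface area when volume_litres of water is split into equal
    spherical droplets; a sphere's surface-to-volume ratio is 6 / d."""
    volume_m3 = volume_litres / 1000.0
    diameter_m = droplet_diameter_um * 1e-6
    return volume_m3 * 6.0 / diameter_m

for diameter_um in (100, 1000):  # mist vs an assumed ~1 mm sprinkler drop
    area = spray_surface_area_m2(1.0, diameter_um)
    print(f"{diameter_um} um droplets: {area:.0f} m^2 per litre")
```

Droplets ten times smaller give ten times the surface area from the same litre of water (about 60 m² versus 6 m² here), which helps explain how mist systems can suppress fires with far less water.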

Suppression System Integration

Modern fire suppression systems integrate with facility management platforms to provide coordinated emergency response. Upon fire detection, these systems can automatically shut down air conditioning systems to prevent smoke circulation, activate emergency lighting and communication systems, initiate orderly server shutdown procedures for non-critical systems, and notify emergency services with detailed facility information.

Zoned suppression systems enable targeted response to fire incidents, suppressing fires in affected areas whilst maintaining normal operations in unaffected portions of the facility. This compartmentalisation becomes critical for large data centres where a localised equipment fire shouldn’t trigger facility-wide shutdowns that could affect millions of users and cost enormous sums in lost revenue.

The integration extends to smoke management systems that use positive and negative pressure zones to control smoke movement, ensuring that smoke from a fire in one area doesn’t migrate to other parts of the facility. These systems coordinate with fire suppression to provide clear evacuation routes whilst preventing smoke damage to equipment in unaffected areas.

Physical Security

Physical security in data centres goes beyond simple access control, encompassing multiple layers of protection designed to prevent unauthorised access, detect intrusion attempts, and respond to security incidents. The concentrated value of computing equipment — individual racks worth hundreds of thousands of pounds — combined with the sensitive data they process makes data centres attractive targets for both cybercriminals and state-sponsored actors.

Perimeter Security

Physical barriers form the outermost security layer, with most data centres implementing reinforced perimeter fencing designed to delay and deter potential intruders. Modern installations use palisade fencing or anti-climb mesh fencing that incorporates features like razor wire, anti-cut materials, and intrusion detection sensors. Companies like Zaun and Jacksons Fencing manufacture specialised security fencing systems designed specifically for critical infrastructure protection.

Vehicle barriers protect against ramming attacks and unauthorised vehicle access. Bollards, rising barriers, and hostile vehicle mitigation systems can stop vehicles weighing several tonnes. The UK’s National Protective Security Authority (NPSA, formerly the Centre for the Protection of National Infrastructure, CPNI) provides guidance on vehicle security barriers appropriate for different threat levels and facility requirements.

Landscape design contributes to security by eliminating hiding places and ensuring clear sight lines for surveillance systems. Defensive landscaping uses thorny vegetation, strategic lighting, and carefully planned access routes to channel visitors through controlled entry points whilst making covert approach difficult. The approach requires balancing security requirements with aesthetic considerations and local planning constraints.

Building Envelope Security

Reinforced construction protects against forced entry attempts and environmental threats. Data centre buildings typically feature reinforced concrete walls, limited windows (often eliminated entirely), and strengthened roof structures designed to resist both deliberate attack and severe weather events. Structural steel reinforcement and reinforced concrete systems provide the foundation for secure building envelopes.

Security doors and airlocks control access through building entrances whilst providing protection against tailgating and forced entry. Blast-resistant doors manufactured by companies like Dynasafe and Steel Security Doors can withstand significant explosive forces whilst maintaining normal operational access. These systems often incorporate bullet-resistant materials and anti-tamper hardware designed to delay sophisticated attack attempts.

Window security becomes critical for facilities that include windows in their design. Blast-resistant and bullet-resistant glass systems provide protection whilst maintaining visibility for normal operations. (Most modern data centres eliminate windows entirely to improve both security and energy efficiency.)

Access Control Systems

Multi-factor authentication systems require multiple forms of identification before granting facility access. Typical implementations combine proximity cards or smart cards with biometric verification such as fingerprint scanning, iris recognition, or facial recognition systems. Companies like HID Global, ASSA ABLOY, and Keenfinity manufacture enterprise-grade access control systems designed for critical infrastructure applications.

Mantrap entry systems prevent unauthorised personnel from following authorised users through secured entry points. These systems require successful authentication at each door in sequence, with only one door able to open at any time. Advanced mantrap systems incorporate weight sensors, occupancy detection, and biometric verification to ensure that only authorised individuals gain access to secured areas.
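The interlock rule for a mantrap is straightforward to express in code. The class below is a hypothetical two-door sketch: each door needs its own successful authentication, the inner door cannot be opened until the outer one has been used, and neither door opens while the other is open. Occupancy sensing and alarms, which real systems add, are omitted.

```python
from typing import Optional

class Mantrap:
    """Hypothetical two-door interlock: outer door first, one door open at a time."""

    DOORS = ("outer", "inner")

    def __init__(self) -> None:
        self.open_door: Optional[str] = None
        self.authenticated: set[str] = set()

    def authenticate(self, door: str, credential_ok: bool) -> None:
        self._check(door)
        if credential_ok:
            self.authenticated.add(door)

    def request_open(self, door: str) -> bool:
        self._check(door)
        if self.open_door is not None and self.open_door != door:
            return False  # interlock: the other door is still open
        if door not in self.authenticated:
            return False  # no valid credential presented at this door
        if door == "inner" and "outer" not in self.authenticated:
            return False  # must pass through the outer door first
        self.open_door = door
        return True

    def close(self, door: str) -> None:
        self._check(door)
        if self.open_door == door:
            self.open_door = None
            if door == "inner":
                self.authenticated.clear()  # cycle complete; next person starts again

    def _check(self, door: str) -> None:
        if door not in self.DOORS:
            raise ValueError(f"unknown door: {door}")

trap = Mantrap()
trap.authenticate("outer", True)
print(trap.request_open("outer"))  # True
trap.authenticate("inner", True)
print(trap.request_open("inner"))  # False: outer door still open
trap.close("outer")
print(trap.request_open("inner"))  # True
```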

Zone-based access control implements different security levels throughout facilities, ensuring that personnel can only access areas appropriate to their roles and clearance levels. Server areas typically require higher clearance than general office areas, whilst critical infrastructure spaces like power and cooling equipment may require additional authorisation levels. This defence-in-depth approach ensures that compromise of outer security layers doesn’t automatically provide access to the most sensitive facility areas.
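Defence in depth can be modelled by treating zones as nested layers: to reach a zone, a person must be authorised for every layer on the way in. The layout, role names and permissions below are hypothetical.

```python
from typing import Optional

# Hypothetical layout: each zone is entered through its parent zone.
PARENT: dict[str, Optional[str]] = {
    "lobby": None,
    "office": "lobby",
    "secure_corridor": "lobby",
    "data_hall": "secure_corridor",
    "power_room": "secure_corridor",
}

# Hypothetical least-privilege permissions: each role lists only what it needs.
PERMISSIONS: dict[str, set[str]] = {
    "visitor": {"lobby"},
    "office_staff": {"lobby", "office"},
    "it_technician": {"lobby", "secure_corridor", "data_hall"},
    "electrical_engineer": {"lobby", "secure_corridor", "power_room"},
}

def path_to(zone: str) -> list[str]:
    """Zones passed through, from the outermost layer inwards, to reach `zone`."""
    path = []
    current: Optional[str] = zone
    while current is not None:
        path.append(current)
        current = PARENT[current]
    return list(reversed(path))

def may_enter(role: str, zone: str) -> bool:
    """True only if the role is authorised for every layer on the way in."""
    allowed = PERMISSIONS.get(role, set())
    return all(layer in allowed for layer in path_to(zone))

print(may_enter("it_technician", "data_hall"))   # True
print(may_enter("it_technician", "power_room"))  # False
```

Because every layer is checked, a role granted a sensitive zone by mistake still cannot reach it without authorisation for the layers around it.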

Surveillance and Detection Systems

CCTV systems provide comprehensive visual monitoring of both exterior and interior facility areas. Modern systems use IP cameras with high-definition video recording, night vision capabilities, and intelligent analytics that can automatically detect suspicious behaviour patterns. Axis Communications, Hikvision, and Keenfinity manufacture professional surveillance systems designed for 24/7 operation in critical facility environments.

Intelligent video analytics enhance surveillance effectiveness by automatically identifying potential security incidents. These systems can detect loitering behaviour, perimeter breaches, abandoned objects, and tailgating attempts without requiring constant human monitoring. Machine learning algorithms continuously improve detection accuracy whilst reducing false alarms that could overwhelm security personnel.

Motion detection systems use various technologies including passive infrared (PIR) sensors, microwave sensors, and dual-technology sensors that combine multiple detection methods to reduce false alarms. These systems provide early warning of intrusion attempts and can trigger automated responses such as lighting activation, alarm notifications, and security personnel dispatch.

Intrusion detection systems monitor doors, windows, and other potential entry points for unauthorised access attempts. Magnetic contact sensors, glass break detectors, and vibration sensors can detect forced entry attempts whilst distinguishing between genuine security threats and normal facility operations such as maintenance activities or weather-related building movement.

Security Integration and Response

Security Operations Centres (SOCs) provide centralised monitoring and response coordination for multiple facility security systems. These facilities are staffed 24/7 by trained security personnel who monitor surveillance systems, respond to security alerts, and coordinate with emergency services when required. Many data centre operators maintain multiple SOCs to provide redundancy and ensure continuous security monitoring even during facility maintenance or emergency situations.

Integration with emergency services ensures rapid response to serious security incidents. Many data centres maintain direct communication links with local police and emergency services, providing facility layout information, access procedures, and emergency contact details that enable effective incident response. Some facilities participate in priority response programs that ensure immediate emergency service attention during critical incidents.

People

Despite the extensive automation and remote management capabilities of modern data centres, human expertise remains essential for maintaining, securing, and operating these complex facilities. The specialised nature of data centre infrastructure requires teams with diverse technical skills, from electrical engineering and network administration to physical security and emergency response.

Facilities Management Teams

Data centre managers oversee day-to-day facility operations, coordinating between technical teams, managing vendor relationships, and ensuring compliance with operational procedures and regulatory requirements. These professionals typically hold qualifications in engineering, facilities management, or related technical fields, with specialised knowledge of critical infrastructure systems. Professional certifications from organisations like Schneider Electric (DCCA) provide industry-recognised credentials for data centre management professionals.

Electrical engineers and technicians maintain power distribution systems, UPS units, generators, and electrical safety systems. These specialists require extensive knowledge of high-voltage electrical systems, power quality analysis, and electrical safety procedures. Many hold certifications from professional engineering bodies such as the Institution of Engineering and Technology (IET) and maintain specialised qualifications in areas like arc flash safety and high-voltage work permits.

Mechanical engineers and HVAC technicians manage cooling systems, air handling units, chillers, and environmental controls. The complexity of modern data centre cooling systems — particularly those incorporating liquid cooling, economiser systems, and advanced controls — requires deep technical expertise in thermodynamics, fluid mechanics, and control systems engineering. Professional qualifications from bodies like the Chartered Institution of Building Services Engineers (CIBSE) and Institute of Refrigeration provide the technical foundation for these roles.

Network and IT Operations

Network Operations staff provide 24/7 monitoring and management of network infrastructure, servers, and applications running within data centre facilities. These engineers monitor network performance, respond to alerts, coordinate maintenance activities, and serve as the first line of technical support for service incidents. Many such professionals hold industry certifications such as Cisco’s CCNA/CCNP, CompTIA Network+, and vendor-specific qualifications for the specific equipment deployed in their facilities.

System administrators and DevOps engineers manage the servers, storage systems, and applications that comprise the computing infrastructure. These professionals require expertise in server hardware, operating systems (Linux, Windows Server), virtualisation platforms, and increasingly, container orchestration and cloud-native technologies. Professional development often includes certifications from vendors like Red Hat, Microsoft, VMware, and cloud providers.

Database administrators maintain the database systems that underpin many applications hosted in data centres. These specialists require deep expertise in database technologies such as Oracle, Microsoft SQL Server, PostgreSQL, and MongoDB, as well as skills in performance optimisation, backup and recovery procedures, and security management.

Security Personnel

Physical security guards provide on-site presence and immediate response to security incidents, access control enforcement, and visitor management. Many security personnel hold qualifications from the Security Industry Authority (SIA) and receive specialised training in data centre security procedures, emergency response protocols, and the specific threats facing critical infrastructure facilities.

Security operations centre analysts monitor surveillance systems, access control logs, and security alerts from remote monitoring centres. These professionals require training in security technologies, incident response procedures, and coordination with law enforcement agencies. Professional development often includes certifications such as Certified Protection Professional (CPP) and Physical Security Professional (PSP).

Cybersecurity specialists focus on the digital security aspects of data centre operations, including network security monitoring, incident response, and coordination between physical and cyber security systems. These professionals typically hold qualifications such as Certified Information Systems Security Professional (CISSP), Certified Ethical Hacker (CEH), and vendor-specific security certifications.

Maintenance and Support Teams

Field service technicians perform scheduled maintenance, hardware repairs, and equipment installations across all facility systems. These professionals require broad technical skills spanning mechanical, electrical, and electronic systems, as well as vendor-specific training for the particular equipment deployed in their facilities. Many technicians hold qualifications from equipment manufacturers such as Schneider Electric, Dell EMC, and Cisco.

Emergency response teams coordinate incident response for facility emergencies, including power outages, equipment failures, security breaches, and natural disasters. Team members typically include representatives from facilities management, IT operations, security, and often external emergency services. Emergency response training includes incident command system protocols, business continuity procedures, and coordination with local emergency services.

Operational Considerations

Shift coverage ensures continuous facility monitoring and response capability. Larger data centres often run multiple shifts to keep staff on site 24/7, whilst smaller facilities may staff the site during standard business hours and rely on on-call engineers out of hours. Follow-the-sun support models enable global operators to provide continuous coverage by coordinating teams across multiple time zones.
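A follow-the-sun rota boils down to mapping the current time to the team whose working day it is. The three regions and eight-hour UTC hand-over times below are hypothetical.

```python
from datetime import datetime, timezone

# Hypothetical hand-over schedule in UTC: (start hour, end hour, team)
SHIFTS = [
    (0, 8, "APAC"),
    (8, 16, "EMEA"),
    (16, 24, "Americas"),
]

def team_on_duty(now: datetime) -> str:
    """Return the team covering the given moment, based on its UTC hour."""
    hour = now.astimezone(timezone.utc).hour
    for start, end, team in SHIFTS:
        if start <= hour < end:
            return team
    raise RuntimeError("shift table does not cover every hour")

print(team_on_duty(datetime(2024, 3, 1, 9, 30, tzinfo=timezone.utc)))  # EMEA
```

Keeping the table in UTC avoids ambiguity between sites, though local hand-over times will then shift by an hour when a region changes to or from daylight saving time.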

Skills development and training programs ensure staff maintain current expertise as data centre technologies evolve. Many operators provide ongoing education through vendor training programs, industry conferences, professional certifications, and internal knowledge sharing initiatives. The rapid pace of technological change in areas like AI infrastructure, edge computing, and sustainability requires continuous learning to maintain operational effectiveness.

Succession planning addresses the challenges of recruiting and retaining skilled personnel in competitive technical job markets. Many facilities cross-train staff across multiple systems to provide operational flexibility, whilst mentorship programs help transfer institutional knowledge from experienced professionals to newer team members.

The increasing sophistication of data centre automation and remote management capabilities is changing skill requirements rather than eliminating the need for human expertise. Modern data centre professionals require broader technical knowledge spanning multiple disciplines whilst developing skills in data analysis, automation tools, and advanced troubleshooting techniques that complement rather than compete with automated systems.

Standards

The design, construction, and operation of data centres are governed by an extensive framework of standards and certifications that ensure reliability, safety, efficiency, and interoperability. These standards span multiple disciplines — from electrical engineering and mechanical systems to cybersecurity and environmental management — reflecting the complex, multidisciplinary nature of modern data centre infrastructure.

The complexity and breadth of applicable standards reflect the critical role data centre infrastructure plays in supporting modern digital services. Compliance with appropriate standards provides assurance of reliability, safety, and operational quality whilst enabling interoperability between systems from different vendors and operators. Here I highlight just a few of them.

As data centre technologies continue evolving — particularly in areas like AI infrastructure, edge computing, and sustainability — standards organisations are balancing innovation with proven reliability requirements to support continued advancement of digital infrastructure capabilities.

Facility and Infrastructure Standards

Tier Classification System from the Uptime Institute provides the most widely recognised framework for data centre reliability, defining four tiers based on redundancy levels and expected annual downtime. Tier I facilities provide basic infrastructure with 99.671% availability (28.8 hours annual downtime), whilst Tier IV facilities achieve 99.995% availability (26.3 minutes annual downtime) through comprehensive redundancy across all systems. This classification system enables operators to design facilities that match specific availability requirements whilst providing customers with clear service level expectations.
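The downtime figures follow directly from the availability percentages. The short Python sketch below reproduces the arithmetic for the two tiers quoted above, assuming a 365-day (8,760-hour) year and treating availability as a simple fraction of that time.

```python
# Convert an availability percentage into expected annual downtime.
# Assumes a 365-day (8,760-hour) year; leap years are ignored.
HOURS_PER_YEAR = 365 * 24  # 8,760 hours


def annual_downtime_hours(availability_percent: float) -> float:
    """Return the expected hours of downtime per year at a given availability."""
    unavailable_fraction = 1 - availability_percent / 100
    return unavailable_fraction * HOURS_PER_YEAR


# The two tier figures quoted in the text.
tier_i_hours = annual_downtime_hours(99.671)
tier_iv_minutes = annual_downtime_hours(99.995) * 60

print(f"Tier I:  {tier_i_hours:.1f} hours per year")      # 28.8
print(f"Tier IV: {tier_iv_minutes:.1f} minutes per year")  # 26.3
```

Each additional "nine" of availability cuts the permitted downtime by a factor of ten, which is why the step from Tier I to Tier IV demands redundancy across every system rather than incremental improvements.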

Hardware and Technology Standards

Open Compute Project (OCP) represents a collaborative approach to hardware standardisation, developing open-source designs for servers, storage systems, and networking equipment. OCP specifications enable more efficient hardware designs whilst reducing vendor lock-in and promoting innovation through shared development efforts. Major hyperscale operators, including Meta (which founded the project as Facebook in 2011), Microsoft, and Google, actively contribute to OCP specifications.

PCI-SIG standards define PCI Express interconnect specifications that govern high-speed connections between processors, accelerators, storage devices, and network interfaces. These standards ensure interoperability between components from different manufacturers whilst enabling the performance levels required for modern data centre workloads.

Security and Compliance Standards

SOC 2 Type II audits provide independent verification that security controls and operational procedures operate effectively over an extended review period, assessed against the criteria of security, availability, processing integrity, confidentiality, and privacy. These audits have become essential for data centre operators serving enterprise customers who require documented assurance of security and operational controls.

ISO 27001 certification demonstrates implementation of comprehensive information security management systems, covering both physical and logical security measures. This certification provides assurance that data centre operators maintain systematic approaches to identifying, assessing, and mitigating security risks across all facility operations.

GDPR (General Data Protection Regulation) and similar data protection regulations impose specific requirements on data centre operators handling personal data, including restrictions on cross-border data transfers, breach notification procedures, and individual privacy rights. Compliance requires coordination between technical infrastructure capabilities and operational procedures to ensure regulatory requirements are met.

Regional and Jurisdictional Requirements

Data sovereignty regulations increasingly mandate that certain types of data remain within specific geographic jurisdictions, driving requirements for local data centre capacity and compliance with national security regulations. These requirements significantly influence facility location decisions and operational procedures for international data centre operators.

Emerging Location Trends

Edge computing locations prioritise proximity to users over traditional infrastructure advantages, driving deployment of smaller facilities in urban areas, retail locations, and telecommunications sites. Low-latency 5G services depend on computing capacity positioned close to cellular base stations, creating demand for micro data centres in locations that would be unsuitable for traditional facilities.
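Proximity matters because physical distance sets a hard floor on latency. As a rough sketch, assuming signals travel through optical fibre at about 200,000 km/s (around two-thirds of the speed of light in a vacuum) and ignoring routing, switching, and queuing delays, the minimum round-trip time grows linearly with distance. The site distances below are hypothetical.

```python
# Minimum round-trip propagation delay over optical fibre.
# Assumption: light travels through fibre at roughly 200,000 km/s.
# Routing detours, switching and queuing delays are ignored,
# so real-world latency will always be higher than this bound.
FIBRE_KM_PER_MS = 200.0  # 200,000 km/s expressed as km per millisecond


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time (ms) for a straight fibre path."""
    return 2 * distance_km / FIBRE_KM_PER_MS


# Hypothetical user-to-site distances.
candidates = {
    "metro edge site": 10,
    "regional facility": 300,
    "distant hyperscale campus": 2000,
}
for site, km in candidates.items():
    print(f"{site}: at least {min_round_trip_ms(km):.1f} ms round trip")
```

Under this assumption every 100 km of fibre adds roughly a millisecond of round-trip time before any processing happens, which is why latency-sensitive workloads are pushed towards the edge.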

Space-based data centres remain largely theoretical but represent potential future locations that could offer global coverage and near-continuous solar power. Cooling is less straightforward than it might appear: in a vacuum, heat can only be shed by radiation, so orbital facilities would need large radiator surfaces. Current technology limitations make this impractical, but advancing space technology could eventually enable orbital data centre deployment.

The optimal data centre location balances multiple competing factors including latency requirements, energy costs, regulatory compliance, environmental sustainability, and operational considerations. As digital infrastructure requirements continue evolving, location strategies must adapt to accommodate new technologies, changing regulations, and growing environmental consciousness whilst maintaining the reliability and performance that underpin modern digital services.

Coming Next

In Part 3 of this series, we’ll explore the virtual structure of data centres - the abstractions which turn all the hardware from giant heaters into useful machines for the diverse range of workloads supported by modern data centres.

Series Navigation

- Part 1: Understanding Applications and Services

- Part 2: Physical Architecture of a Single Data Centre (this post)

- Part 3: Virtual Architecture of a Single Data Centre

- Part 4: Spanning Multiple Data Centres

- Part 5: Business Models and Economics

- Part 6: Evaluation and Future Trends

Glossary

Anti-climb fencing: Security fencing designed with closely spaced mesh or other features that prevent intruders from gaining handholds or footholds for climbing. Anti-climb fencing typically features small mesh openings and may include additional deterrent features like razor wire or anti-cut materials.

Critical infrastructure facilities use anti-climb fencing to create effective perimeter barriers that delay unauthorised access attempts whilst maintaining visibility for surveillance systems.

Arc flash protection: Safety systems designed to protect personnel and equipment from electrical arc flash incidents, dangerous releases of energy that can occur during electrical faults. Arc flash protection includes specialised clothing, warning systems, and circuit protection devices.

Data centre electrical systems require comprehensive arc flash analysis and protection due to the high currents and voltages involved in power distribution to computing equipment.

Arc flash safety: Safety protocols and equipment designed to protect personnel from electrical arc flash incidents, dangerous releases of energy that occur during electrical faults. Arc flash safety includes specialised clothing, warning systems, training programs, and equipment design that minimises arc flash risks.

Data centre electrical work requires comprehensive arc flash safety programs due to the high energy levels present in power distribution systems serving computing equipment.

Automated tiering: Technology that automatically moves data between different storage media based on access patterns and performance requirements. Frequently accessed data migrates to high-performance storage whilst inactive data moves to lower-cost storage tiers.

Automated tiering optimises storage costs by ensuring that expensive high-performance storage contains only actively used data whilst maintaining accessibility of archived information.
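The promotion and demotion logic can be illustrated with a simple threshold policy. This is a minimal Python sketch, not any vendor's algorithm: the tier names, the two thresholds, and the idea of counting accesses per monitoring window are assumptions chosen for clarity.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real tiering engines use vendor-specific
# heuristics, time windows and migration budgets.
HOT_THRESHOLD = 100  # accesses per window needed to qualify for the fast tier
COLD_THRESHOLD = 5   # accesses per window below which data is demoted


@dataclass
class Extent:
    """A chunk of stored data tracked by the (hypothetical) tiering engine."""
    name: str
    tier: str             # "ssd", "hdd" or "archive"
    recent_accesses: int  # accesses observed in the last monitoring window


def choose_tier(extent: Extent) -> str:
    """Pick a storage tier for an extent from its recent access count."""
    if extent.recent_accesses >= HOT_THRESHOLD:
        return "ssd"
    if extent.recent_accesses < COLD_THRESHOLD:
        return "archive"
    return "hdd"


def rebalance(extents: list[Extent]) -> list[tuple[str, str, str]]:
    """Apply tier changes in place and return (name, from_tier, to_tier) moves."""
    moves = []
    for extent in extents:
        target = choose_tier(extent)
        if target != extent.tier:
            moves.append((extent.name, extent.tier, target))
            extent.tier = target
    return moves


extents = [
    Extent("orders-db", "hdd", 450),   # busy: promoted to SSD
    Extent("q3-logs", "ssd", 2),       # idle: demoted to archive
    Extent("user-photos", "hdd", 40),  # moderate: stays on HDD
]
for name, src, dst in rebalance(extents):
    print(f"move {name}: {src} -> {dst}")
```

Using two separate thresholds leaves a middle band where data stays put, which helps avoid shuffling extents back and forth when their access counts hover near a single cut-off.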

Blade server: Modular server design where multiple server units (blades) fit into a shared chassis that provides power, cooling, networking, and management infrastructure. Blade systems achieve higher density than traditional rack-mount servers by sharing infrastructure components.

A typical blade chassis occupying around 10U of rack space can house 16 or more server blades, where the same space would otherwise accommodate ten 1U rack-mount servers.

Busway: Prefabricated electrical distribution system using enclosed bus bars to distribute power throughout facilities. Busway systems provide flexible, high-capacity power distribution that can be easily reconfigured as facility requirements change.

Data centres use busway systems to provide reliable power distribution to server racks whilst enabling easy moves, adds, and changes as equipment configurations evolve.

Defence in depth: Security strategy that implements multiple layers of protection rather than relying on any single security measure. Each layer provides independent protection, ensuring that compromise of one security control doesn't provide complete access to protected resources.

Data centres implement defence in depth through perimeter security, building access controls, internal zone restrictions, equipment-level security, and digital access controls working together as an integrated security system.
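Defence in depth can be modelled as a chain of independent checks, where a request must pass every layer and failure at any one of them stops it. The Python sketch below is hypothetical: the request fields and layer names stand in for whatever controls a real facility uses.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class AccessRequest:
    """Hypothetical credentials presented by someone moving through the site."""
    badge_valid: bool
    pin_correct: bool
    biometric_match: bool
    authorised_zones: frozenset
    requested_zone: str


# Each layer is an independent check; layer names are illustrative only.
LAYERS: List[Tuple[str, Callable[[AccessRequest], bool]]] = [
    ("perimeter badge reader", lambda r: r.badge_valid),
    ("building PIN pad", lambda r: r.pin_correct),
    ("data hall biometric scanner", lambda r: r.biometric_match),
    ("zone authorisation", lambda r: r.requested_zone in r.authorised_zones),
]


def evaluate(request: AccessRequest) -> Tuple[bool, Optional[str]]:
    """Grant access only if every layer passes; report the first layer that fails."""
    for name, check in LAYERS:
        if not check(request):
            return False, name
    return True, None


# A stolen badge alone gets past the perimeter but is stopped at the next layer.
stolen_badge = AccessRequest(True, False, False, frozenset({"hall-a"}), "hall-a")
print(evaluate(stolen_badge))  # (False, 'building PIN pad')

engineer = AccessRequest(True, True, True, frozenset({"hall-a"}), "hall-a")
print(evaluate(engineer))      # (True, None)
```

Because each layer checks something different, compromising one credential (here, a badge) does not grant access on its own, and the first failing layer tells security staff where the attempt was stopped.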

Dual-socket server: Server motherboard design that accommodates two separate CPU sockets, enabling installation of two processors to increase computational capacity. Dual-socket configurations provide higher core counts, memory capacity, and I/O bandwidth compared to single-socket designs.

Modern dual-socket servers can support processors with 64 or more cores each, providing 128 or more cores of processing power in a single system whilst sharing memory and storage resources.

ECC (error-correcting code) memory: Memory technology that can detect and automatically correct single-bit errors, preventing data corruption in server and workstation applications. ECC memory includes additional memory chips that store check bits used to identify and fix memory errors.

Enterprise servers require ECC memory to prevent data corruption that could cascade through applications and databases, providing higher reliability than consumer-grade non-ECC memory.
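The correction mechanism can be illustrated with the classic Hamming(7,4) code, which adds three check bits to every four data bits and can locate and fix any single flipped bit. Server ECC modules use wider codes of the same family (typically single-error-correcting, double-error-detecting codes over 64-bit words), so treat this Python sketch as an illustration of the principle rather than what a memory controller actually implements.

```python
def encode(data: list[int]) -> list[int]:
    """Encode 4 data bits into a 7-bit Hamming codeword (positions 1..7)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    # Check bits sit at the power-of-two positions 1, 2 and 4.
    return [p1, p2, d1, p4, d2, d3, d4]


def decode(codeword: list[int]) -> tuple[list[int], int]:
    """Correct up to one flipped bit; return (data bits, error position or 0)."""
    bits = list(codeword)
    syndrome = 0
    for position, bit in enumerate(bits, start=1):
        if bit:
            syndrome ^= position  # XOR of the positions of all set bits
    if syndrome:
        bits[syndrome - 1] ^= 1  # flip back the bit the syndrome points at
    return [bits[2], bits[4], bits[5], bits[6]], syndrome


original = [1, 0, 1, 1]
stored = encode(original)
stored[4] ^= 1  # simulate a single-bit flip at position 5
recovered, error_at = decode(stored)
print(recovered, error_at)  # [1, 0, 1, 1] 5
```

The syndrome is zero for a valid codeword and equals the position of the flipped bit when exactly one bit has changed. Plain Hamming(7,4) cannot distinguish a double-bit error from a single-bit one, which is why practical ECC adds an extra overall parity bit for detection.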

EMI (electromagnetic interference): Unwanted electromagnetic signals that can disrupt the operation of electronic equipment. In data centres, EMI can cause data corruption, network errors, and system instability if not properly controlled through cable management and shielding.

Proper separation of power and data cables, use of shielded cables, and structured cabling systems help minimise EMI in dense computing environments.

Follow-the-sun support: Support model where work responsibilities pass between teams in different time zones to provide continuous coverage without requiring individual staff to work outside normal business hours. Follow-the-sun support enables global organisations to provide 24/7 service whilst maintaining reasonable work schedules.

Global data centre operators use follow-the-sun models to provide continuous monitoring and support across multiple regions, with responsibilities transferring between Asia-Pacific, European, and American support teams as business hours change.
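Deciding which team is on duty reduces to converting the current time to a shared reference (UTC) and looking it up in a hand-over table. In this minimal Python sketch the three eight-hour windows and the example cities are assumptions; real schedules usually overlap shifts to allow a proper hand-over.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical hand-over schedule in UTC hours (start inclusive, end exclusive).
SHIFTS = [
    ("Asia-Pacific", 0, 8),  # e.g. 08:00-16:00 in Singapore (UTC+8)
    ("Europe", 8, 16),       # e.g. 08:00-16:00 in London (UTC+0, winter)
    ("Americas", 16, 24),    # e.g. 11:00-19:00 in New York (UTC-5, winter)
]


def team_on_duty(moment: datetime) -> str:
    """Return the team responsible at a timezone-aware moment."""
    hour = moment.astimezone(timezone.utc).hour
    for team, start, end in SHIFTS:
        if start <= hour < end:
            return team
    raise ValueError("shift table does not cover every hour")


print(team_on_duty(datetime(2024, 1, 15, 3, 30, tzinfo=timezone.utc)))  # Asia-Pacific
print(team_on_duty(datetime(2024, 1, 15, 20, 0, tzinfo=timezone.utc)))  # Americas

# 09:00 in New York (UTC-5) is 14:00 UTC, so Europe still holds the pager.
new_york = timezone(timedelta(hours=-5))
print(team_on_duty(datetime(2024, 1, 15, 9, 0, tzinfo=new_york)))  # Europe
```

Normalising to UTC before the lookup keeps the table independent of any one office's local time and daylight-saving changes.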

Heat sinks: Passive cooling components that transfer heat away from processors and other components through conductive and convective heat transfer. Heat sinks use metal fins or other structures to increase surface area for heat dissipation to the surrounding air.

High-performance servers often use large heat sinks combined with powerful fans, while some applications require liquid cooling systems for more efficient heat removal.

Helium-filled drives: Enterprise hard disk drives that replace air with helium gas inside the sealed drive enclosure. Helium's lower density reduces drag on spinning disks, enabling higher capacities, lower power consumption, and improved reliability.

Helium-filled drives can accommodate more disk platters in the same enclosure size, achieving capacities exceeding 20TB while maintaining enterprise reliability specifications.

Intel AVX-512 (Advanced Vector Extensions 512): Vector processing instructions that enable processors to perform the same mathematical operation on many data elements simultaneously. AVX-512 can process 512-bit vectors in a single instruction, dramatically accelerating mathematical computations.

Scientific computing, AI training, and financial modelling applications benefit significantly from AVX-512 acceleration, though the technology increases power consumption and heat generation.

Intel TXT (Trusted Execution Technology): Hardware-based security technology that provides a trusted foundation for launching operating systems and hypervisors. TXT creates a measured boot environment where system integrity can be cryptographically verified.

Enterprise data centres use TXT to establish chains of trust that help prevent rootkits and other low-level security threats from compromising server systems.
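Measured boot rests on a simple hash-chaining operation: each component is hashed and "extended" into a Trusted Platform Module (TPM) register, so the final value depends on every component and the order in which they loaded. This Python sketch shows only that extend arithmetic with SHA-256 and placeholder component names; in a real TXT launch the measurements are taken by hardware and authenticated code modules and recorded in the TPM, not computed by application software.

```python
import hashlib

PCR_SIZE = 32  # a SHA-256 digest is 32 bytes


def extend(pcr: bytes, component: bytes) -> bytes:
    """TPM-style extend: new PCR = SHA-256(old PCR || SHA-256(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + measurement).digest()


def measure_chain(components: list[bytes]) -> bytes:
    """Extend each component in load order into a register that starts at zero."""
    pcr = bytes(PCR_SIZE)  # simplification: register starts as 32 zero bytes
    for component in components:
        pcr = extend(pcr, component)
    return pcr


# Placeholder "images"; a real system would hash the actual binaries.
known_good = measure_chain([b"firmware-v1", b"bootloader-v7", b"hypervisor-v3"])

# Swapping in a modified bootloader changes the final value, so a verifier
# that compares against the known-good value can refuse to trust the platform.
tampered = measure_chain([b"firmware-v1", b"bootloader-patched", b"hypervisor-v3"])
print(tampered == known_good)  # False
```

Because each step hashes the previous register value, a compromised component cannot undo an earlier measurement or reorder the chain to reproduce the known-good value.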

Internet Exchange Point (IXP): Physical infrastructure where multiple internet service providers, content delivery networks, and other network operators interconnect to exchange traffic. IXPs improve internet performance by enabling direct peering rather than routing traffic through third-party networks.

Major IXPs like London Internet Exchange (LINX) and Amsterdam Internet Exchange (AMS-IX) handle peak traffic measured in terabits per second, making them critical infrastructure for regional internet connectivity.

Liquid cooling: Advanced cooling systems that use circulated coolant (typically water or specialised fluids) to remove heat directly from components. Liquid cooling is more efficient than air cooling and essential for high-density computing environments like AI training clusters.

Modern data centres use both closed-loop systems for individual servers and facility-wide chilled water systems for rack-level cooling distribution.
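Much of the efficiency advantage comes from water's far greater capacity to carry heat. Using the heat balance Q = ṁ · c_p · ΔT, the Python sketch below compares the coolant flow needed to remove the same heat load with water and with air. The 50 kW rack, the 10 K temperature rise, and the textbook property values are illustrative assumptions, not figures from any real facility.

```python
# Coolant flow needed to carry away a heat load: Q = m_dot * c_p * delta_T.
# Assumed property values: water c_p ~ 4,186 J/(kg*K), density ~ 1 kg/L;
# air c_p ~ 1,005 J/(kg*K), density ~ 1.2 kg/m^3 at room conditions.
WATER_CP = 4186.0    # J/(kg*K)
WATER_DENSITY = 1.0  # kg/L
AIR_CP = 1005.0      # J/(kg*K)
AIR_DENSITY = 1.2    # kg/m^3


def mass_flow_kg_s(heat_w: float, cp: float, delta_t_k: float) -> float:
    """Mass flow (kg/s) needed to absorb heat_w watts with a delta_t_k rise."""
    return heat_w / (cp * delta_t_k)


rack_heat_w = 50_000  # hypothetical high-density rack
delta_t = 10.0        # assumed coolant temperature rise in kelvin

water_l_per_min = mass_flow_kg_s(rack_heat_w, WATER_CP, delta_t) / WATER_DENSITY * 60
air_m3_per_s = mass_flow_kg_s(rack_heat_w, AIR_CP, delta_t) / AIR_DENSITY

print(f"water: {water_l_per_min:.0f} L/min")  # about 72 L/min
print(f"air:   {air_m3_per_s:.1f} m^3/s")     # about 4.1 m^3/s
```

Under these assumptions the rack needs about 72 litres of water per minute, against roughly 4 cubic metres of air every second, a volume ratio of several thousand to one.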

Loitering detection: Video analytics technology that automatically identifies when individuals remain in specific areas longer than normal patterns would suggest. Loitering detection helps security personnel identify potential reconnaissance activities or other suspicious behaviour around critical facilities.
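At its simplest, loitering detection compares how long a tracked person has been in a zone against a dwell-time threshold. This Python sketch assumes an upstream video tracker already assigns each person a stable track ID; the 120-second threshold and the zone names are hypothetical, and production systems typically learn normal dwell times per area rather than using a fixed value.

```python
from dataclasses import dataclass

# Hypothetical fixed threshold for illustration.
LOITER_THRESHOLD_S = 120.0


@dataclass
class Sighting:
    track_id: int       # identity assigned by an (assumed) upstream tracker
    zone: str
    timestamp_s: float  # seconds since monitoring started


def loitering_alerts(sightings: list[Sighting],
                     threshold_s: float = LOITER_THRESHOLD_S) -> set[tuple[int, str]]:
    """Flag (track, zone) pairs whose dwell time exceeds the threshold."""
    first_seen: dict[tuple[int, str], float] = {}
    alerts = set()
    for item in sorted(sightings, key=lambda s: s.timestamp_s):
        key = (item.track_id, item.zone)
        first_seen.setdefault(key, item.timestamp_s)
        if item.timestamp_s - first_seen[key] > threshold_s:
            alerts.add(key)
    return alerts


sightings = [
    Sighting(7, "north-fence", 0.0),
    Sighting(9, "gate", 10.0),
    Sighting(7, "north-fence", 60.0),
    Sighting(9, "gate", 40.0),
    Sighting(7, "north-fence", 150.0),
]
print(loitering_alerts(sightings))  # {(7, 'north-fence')}
```

This version measures from the first sighting, so someone who leaves and returns later is treated as having stayed throughout; a fuller implementation would reset the timer after a gap in sightings.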